This is a self-assessment. It is not balanced. It is not gentle. It is not here to validate your operating model, your org chart, or the deck you use to reassure executives. It exists to surface how you actually think about technology leadership when pressure arrives and incentives collide with reality.

Answer honestly. Not as the leader you describe in interviews. As the leader you become when systems fail, timelines slip, engineers disagree, and someone senior wants a simple answer to a complex problem.

This is written like a Cosmopolitan quiz for people who run technology at scale. Every option is phrased to sound reasonable, responsible, and professionally defensible. That is the point. The wrong answers are rarely stupid. They are seductive.

How to Score Yourself

🟢 Durable leadership – builds long-term capability, speed, and resilience

🟡 Context-dependent – reasonable sometimes, dangerous as a default

🔴 Fragile leadership – optimises for optics, control, and personal safety

After answering all questions, count how many 🟢, 🟡, and 🔴 answers you selected. Then read the interpretation at the end.

Questions

1. A Major Production Outage Happens at 02:00

Engineers are already working on it. Your first instinct is to:

A. Take command of coordination so decisions are fast and consistent

B. Stabilise stakeholders early so the business stays calm and aligned

C. Ask for impact, current mitigation, and what help the team needs, then get out of the way

D. Jump into the technical detail where you can add leverage immediately

2. An Engineer Pushes Back on Your Deadline

They say the timeline is unrealistic. Your response is to:

A. Keep the date but ask the team to propose explicit tradeoffs to protect quality

B. Keep the date to preserve confidence, then work on increasing capacity or reducing friction

C. Reopen assumptions together, quantify risk, and reset the plan transparently

D. Ask for options and let the team choose the best path so ownership stays with them

3. A Project Misses Its Delivery Date

Your immediate conclusion is:

A. Execution needs tightening, so you will introduce clearer routines and stronger follow through

B. Signalling failed, so you will improve reporting so leadership is never surprised again

C. The system failed, so you will examine scope, dependencies, architecture, and incentives

D. Sequencing failed, so you will revisit the plan to create a more credible delivery path

4. How Do You View Architecture?

Which best matches your belief?

A. Architecture is guardrails that keep teams safe and aligned at speed

B. Architecture is clarity: decisions recorded so people stop debating the same things

C. Architecture is the constraint system that determines how cheaply you can change

D. Architecture is enablement: it should remove friction and increase throughput

5. A Team Wants to Pivot Mid-Stream

New information invalidates the original approach. You say:

A. Pivot if the learning is real, but time-box it and control the blast radius

B. Pivot carefully because confidence matters, and churn can destroy momentum

C. Pivot early if staying the course is more expensive than switching

D. Pivot only with a crisp alternative and a clear risk reduction story

6. How Do You Measure Engineering Performance?

Your most trusted signals are:

A. Predictable delivery plus stable operations, because reliability is a feature

B. Stakeholder confidence, because alignment reduces thrash and rework

C. Lead time, failure rate, recovery time, and client outcomes

D. A balanced view: delivery, quality, people health, and operational maturity

7. A Senior Engineer Disagrees With You Publicly

In a meeting they challenge your decision. You:

A. Welcome it, but structure the debate so it stays constructive and time-boxed

B. Acknowledge it and move it offline so the group stays aligned and productive

C. Explore it openly if it improves the decision and teaches the room

D. Ask for a clear alternative, evidence, and a decision recommendation

8. Planning Means What to You?

When you hear “planning”, you think:

A. Commitments with explicit tradeoffs so delivery is credible

B. A narrative that keeps stakeholders confident and reduces churn

C. Direction, constraints, and fast feedback loops that allow adaptation

D. A tool to reduce chaos while preserving optionality

9. A System Is Known to Be Fragile

It works, but only if nobody touches it. You choose to:

A. Stabilise it with targeted fixes while keeping delivery moving

B. Reduce the surface area of change to keep risk contained

C. Invest in removing fragility because it compounds and taxes every change

D. Contain it, ring-fence it, and build a replacement plan

10. How Much Management Do You Want to Hire?

As the organisation grows, your instinct is to:

A. Add leaders only where coordination genuinely increases throughput

B. Add management capacity so engineers can focus and stakeholders are handled

C. Keep it flatter and scale through platforms, clarity, and better interfaces

D. Blend strong technical leads with a small, high-leverage management layer

11. Which Reports Do You Value Most?

You feel safest when you see:

A. Delivery and stability metrics with leading indicators

B. Executive summaries that are clear, confident, and action-oriented

C. Trend lines tied to outcomes, explained plainly

D. A small set of metrics teams trust and do not game

12. How Do You View Engineers?

Pick the closest description.

A. Skilled professionals who need clarity, constraints, and autonomy

B. Partners in execution who need protection from churn and noise

C. Problem solvers who need context, trust, and feedback

D. Craftspeople who need high standards and space to do quality work

13. What Is the Ideal Career Path for an Engineer?

In your organisation, growth means:

A. Two paths: management for some, deep technical impact for others

B. Broader influence across stakeholders and business outcomes

C. Technical mastery that scales impact without forced management

D. A clear ladder where impact, scope, mentoring, and judgement define seniority

14. A Team Asks for Time to Pay Down Technical Debt

Your reaction is:

A. Support it if it is tied to reliability, speed, or risk reduction

B. Support it if it can be planned without disrupting delivery commitments

C. Support it because debt compounds and removes future options

D. Support it with guardrails and measurable outcomes so it stays bounded

15. Client Centricity Shows Up When?

Client focus matters most when:

A. Tradeoffs are made, because priorities become real

B. Stakeholder stories are written, because narratives drive investment

C. Engineers can explain client impact without being prompted

D. Roadmaps are shaped by behaviour, pain, and retention

16. A New Tool or Platform Is Proposed

You decide based on:

A. Strategic fit and total cost of ownership over time

B. Defensibility and alignment with standards so governance friction is low

C. Operability, durability, and the ability to change safely at speed

D. Team capability and long-term maintainability, not the demo

17. Cost Pressure Hits the Organisation

Your instinctive response is to:

A. Remove waste first, then protect investments that buy durability

B. Introduce clear cost controls so spend is disciplined and visible

C. Separate cosmetic savings from structural cost reduction

D. Shift spend toward automation and platforms that reduce run costs

18. Outsourcing and Vendors

Which best matches your stance?

A. Outsource non-core execution, keep accountability and architecture internal

B. Use vendors to scale quickly and reduce delivery risk, with strong oversight

C. Outsource execution but keep primitives, recovery, and design authority in-house

D. Delegate build and run, and focus on outcomes, governance, and oversight

19. “Tried and Tested” Technology Leadership

When you use this phrase, you mean:

A. Proven patterns reduce risk and free attention for real problems

B. Familiar approaches reduce friction and keep stakeholders comfortable

C. Known tradeoffs are chosen consciously with eyes open

D. Boring technology is stable, scalable, and easier to operate

20. Upwards Management

A senior executive wants certainty you cannot honestly provide yet. You:

A. Provide ranges and scenarios, and explain what will reduce uncertainty next

B. Provide a confident narrative to keep momentum, then refine details underneath

C. Provide the truth plainly, including risk and what is unknown

D. Provide a short answer now, then follow up once validated

Answer Key With Explanations

Each option is scored 🟢, 🟡, or 🔴, and each explanation focuses on what that option optimises for over time.

1. A Major Production Outage Happens at 02:00

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Decisive leadership feels like responsibility | Can create hero dependency and slow teams over time |
| B | 🔴 | Stakeholder calm is valuable and executives reward it | Optimises for optics first, so reality can be delayed |
| C | 🟢 | Focusing on impact and enablement keeps experts effective | Faster recovery and better learning loops |
| D | 🟡 | Hands-on support can add real leverage | Risks becoming a bottleneck or a source of noise |

2. An Engineer Pushes Back on Your Deadline

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Keeps the date while sounding pragmatic | Tradeoffs can be silently paid for in quality if not made explicit |
| B | 🔴 | Preserves confidence and avoids renegotiating | Turns planning into certainty theatre and burns out teams |
| C | 🟢 | Makes assumptions and risk visible early | Trust and more credible delivery |
| D | 🟡 | Ownership is motivating and scalable | Can dump accountability on the team if constraints are fixed |

3. A Project Misses Its Delivery Date

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Discipline is a clean lever to pull | Process sprawl if root causes are structural |
| B | 🔴 | “No surprises” is rewarded culturally | Reality gets filtered until it explodes |
| C | 🟢 | Systems thinking matches most real failures | Structural improvement and fewer repeats |
| D | 🟡 | Planning quality does matter | Can become planning theatre if constraints stay unchanged |

4. How Do You View Architecture?

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Guardrails feel responsible and scalable | Can drift into paperwork if not grounded in reality |
| B | 🔴 | Clarity and standardisation feel efficient | Freezes evolution and favours defensibility over fitness |
| C | 🟢 | Links architecture directly to cost of change | Real leverage and safer speed |
| D | 🟡 | Enablement is the right intent | Fails if architects are not deeply technical |

5. A Team Wants to Pivot Mid-Stream

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Controls churn and protects delivery | Can preserve sunk-cost bias |
| B | 🔴 | Momentum and confidence are genuinely valuable | Overweights optics and ships the wrong thing on time |
| C | 🟢 | Minimises total cost by switching early | Adaptation and better outcomes |
| D | 🟡 | Forces clarity and reduces random pivots | Can suppress weak but important signals |

6. How Do You Measure Engineering Performance?

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Predictability and stability are visible | Can reward activity over outcomes |
| B | 🔴 | Confidence reduces thrash short term | Encourages green reporting and gaming |
| C | 🟢 | Measures flow and outcomes objectively | Sustainable delivery and reliability |
| D | 🟡 | Balance sounds mature | Often dilutes signal and becomes subjective |

7. A Senior Engineer Disagrees With You Publicly

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟢 | Keeps dissent but prevents chaos | Healthy debate culture |
| B | 🔴 | Prevents confusion and meeting derailment | Teaches people not to surface truth |
| C | 🟢 | Builds learning and better decisions | Psychological safety and stronger reasoning |
| D | 🟡 | Demands rigour and clarity | Can intimidate less senior voices |

8. Planning Means What to You?

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Commitments make organisations move | Can lock in fantasy if feedback loops are weak |
| B | 🔴 | Confidence creates momentum | Turns plans into performative certainty |
| C | 🟢 | Feedback loops create accuracy | Real alignment and adaptation |
| D | 🟡 | Reduces chaos without overcommitting | Can become noncommittal if overused |

9. A System Is Known to Be Fragile

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Keeps delivery moving while improving stability | Can become whack-a-mole without a strategy |
| B | 🔴 | Containment feels prudent | Hides risk and increases blast radius later |
| C | 🟢 | Pays down the fragility tax | Lower incident rate and faster change |
| D | 🟡 | Replacement feels decisive | Risky if replacement becomes a multi-year mirage |

10. How Much Management Do You Want to Hire?

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟢 | Management only where it adds leverage | Healthy scale |
| B | 🔴 | Protects engineers and satisfies stakeholders | Buffers dysfunction instead of fixing it |
| C | 🟡 | Flat orgs are fast | Needs strong systems and senior teams |
| D | 🟢 | Balanced, high-leverage structure | Scales well when execution is strong |

11. Which Reports Do You Value Most?

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Metrics feel objective and safe | Can become metric theatre |
| B | 🔴 | Executive clarity is genuinely useful | Encourages filtering and optics management |
| C | 🟢 | Trends reveal truth early | Better decisions with less panic |
| D | 🟢 | Trusted metrics reduce gaming | Honest, high-signal conversations |

12. How Do You View Engineers?

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟢 | Autonomy with constraints scales | Strong ownership |
| B | 🟡 | Protecting focus helps throughput | Can hide underlying churn causes |
| C | 🟢 | Context unlocks better decisions | High-quality problem solving |
| D | 🟡 | Standards matter for quality | Can drift into perfectionism |

13. What Is the Ideal Career Path for an Engineer?

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟢 | Respects different strengths | Retains top technical talent |
| B | 🟡 | Influence across the business can be powerful | Risks valuing politics over craft |
| C | 🟢 | Technical mastery stays rewarded | Durable engineering excellence |
| D | 🟢 | Clarity reduces ambiguity | Strong mentoring and judgement culture |

14. A Team Asks for Time to Pay Down Technical Debt

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Ties debt to outcomes | Often underfunded unless protected |
| B | 🔴 | Protects commitments and optics | Debt accumulates invisibly |
| C | 🟢 | Recognises compounding cost | Lower fragility and faster delivery later |
| D | 🟡 | Guardrails prevent abuse | Can cap the work into irrelevance |

15. Client Centricity Shows Up When?

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟢 | Tradeoffs reveal true priorities | Real client focus |
| B | 🟡 | Stories influence investment | Risks becoming marketing-led |
| C | 🟢 | Engineers internalise impact | Culture, not performance |
| D | 🟢 | Behaviour is the truth | Better product decisions |

16. A New Tool or Platform Is Proposed

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟡 | Strategy and TCO are real | Can miss operability pitfalls |
| B | 🔴 | Governance alignment reduces friction | Defensibility over fitness |
| C | 🟢 | Operability decides long-term pain | Fewer outages and safer speed |
| D | 🟡 | Maintainability matters | Can underweight platform primitives |

17. Cost Pressure Hits the Organisation

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟢 | Cuts waste, protects capability | Strong long-term position |
| B | 🔴 | Discipline is rewarded upward | Cost grids that remove options |
| C | 🟢 | Distinguishes real savings from theatre | Structural cost reduction |
| D | 🟡 | Automation reduces run cost | Needs careful sequencing |

18. Outsourcing and Vendors

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟢 | Focuses internal teams on what matters | Clear accountability |
| B | 🔴 | Feels like risk reduction | Capability erosion and vendor dependency |
| C | 🟢 | Outsources labour, not thinking | Resilience and adaptability |
| D | 🟡 | Oversight feels like scale | Contract management replaces engineering if overused |

19. “Tried and Tested” Technology Leadership

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟢 | Proven patterns reduce risk | Good conservatism |
| B | 🔴 | Familiarity keeps stakeholders calm | Comfort leadership and stagnation |
| C | 🟢 | Conscious tradeoffs are power | Better long-term decisions |
| D | 🟢 | Boring tech is operable | Durable platforms when paired with modern practices |

20. Upwards Management

| Option | Score | Why it is attractive | What it tends to create |
|---|---|---|---|
| A | 🟢 | Ranges respect uncertainty | Trust and alignment |
| B | 🔴 | Confidence preserves momentum | Certainty theatre and truth decay |
| C | 🟢 | Plain truth builds credibility | Fewer public explosions |
| D | 🟡 | Speed matters | Risky if the follow-up never arrives |

Interpretation

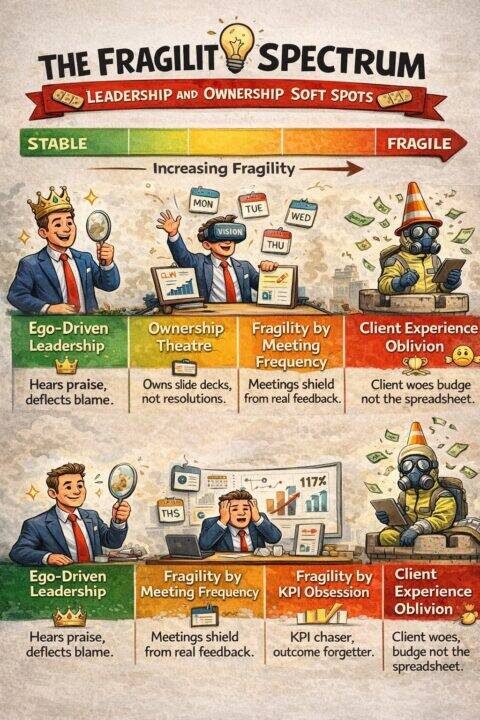

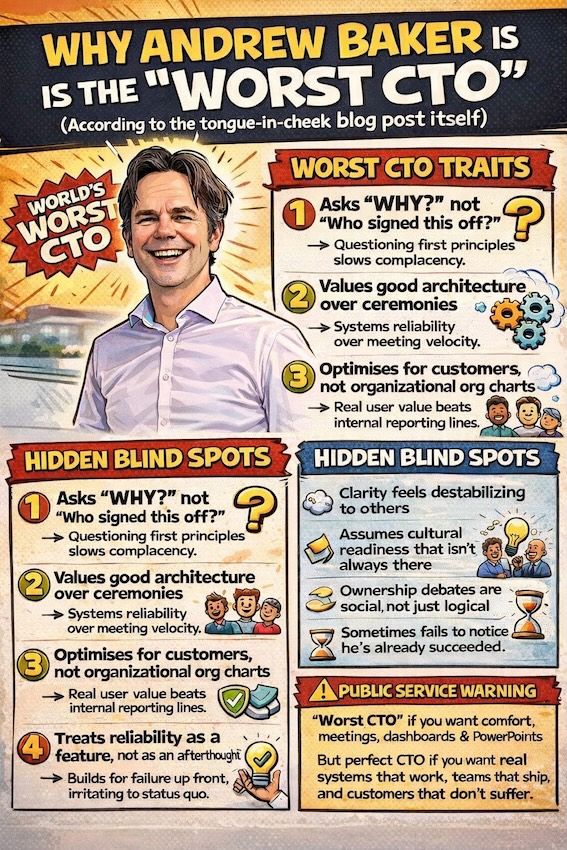

Mostly 🟢 means you are building a durable technology organisation. You can delegate without losing ownership, use vendors without outsourcing thinking, and manage up without lying.

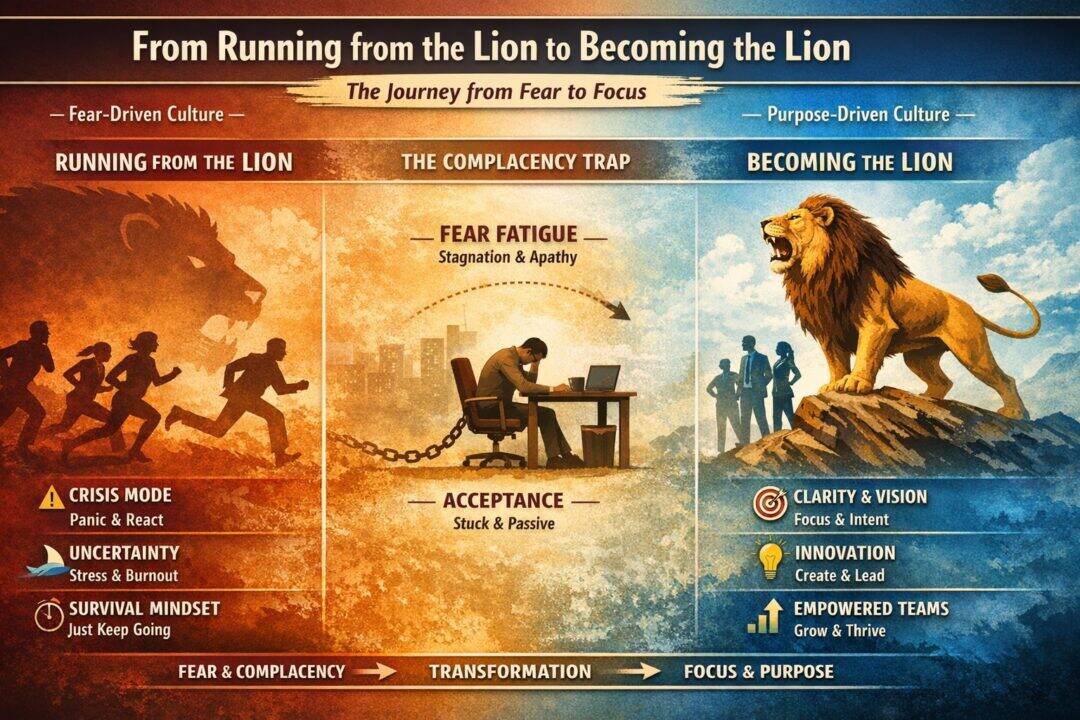

Mostly 🟡 means you have good instincts but pressure pushes you toward convenience. Under stress, your worst defaults will dominate unless you consciously correct them.

Mostly 🔴 means you optimise for optics, comfort, and personal safety. You are likely an upwards-management leader: your energy goes into managing perceptions above you rather than the system below you. Your organisation will look calm right up until it fails loudly.

The most dangerous leaders are not incompetent. They are reassuring.