Below is a quick (I'm busy) outline of how to automatically stop and start your EC2 instances.

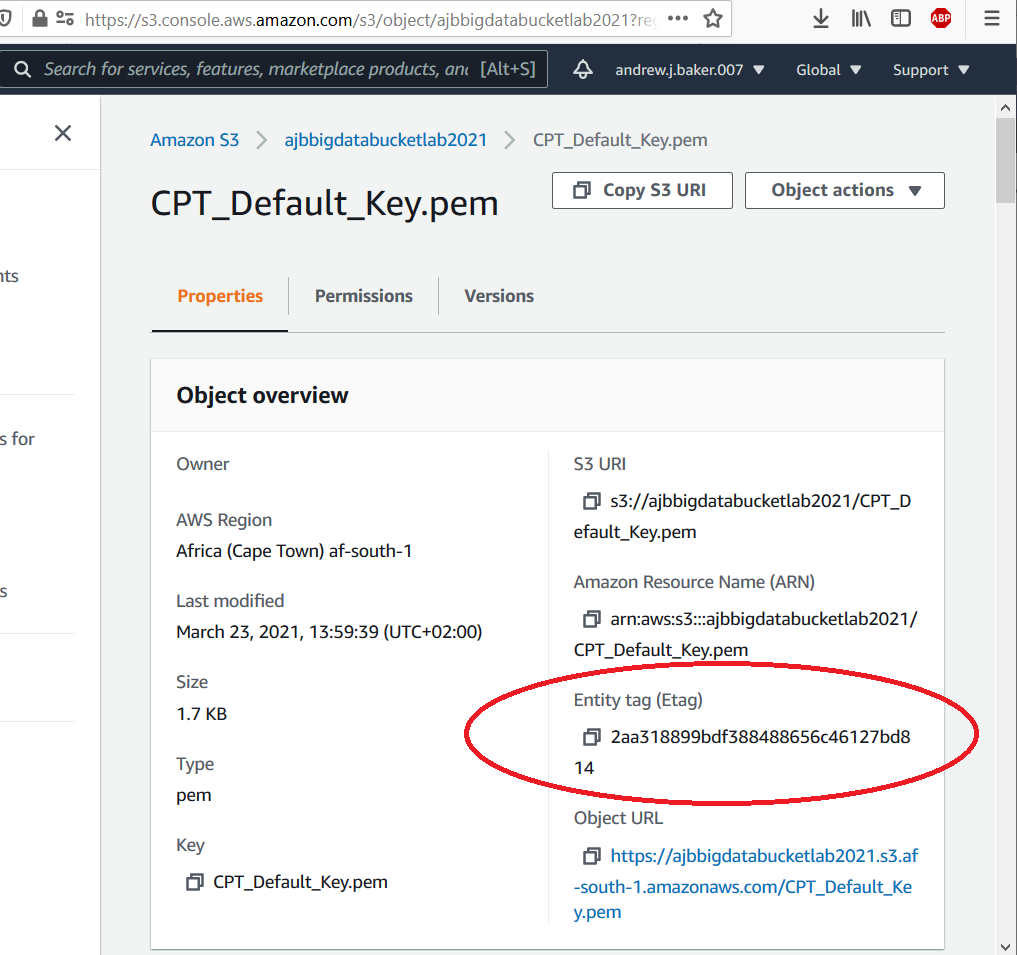

Step 1: Tag your resources

To decide which instances stop and start, first add an auto-start-stop: Yes tag to every instance you want the start / stop functions to affect. Note: you can use “Resource Groups and Tag Editor” to bulk-apply this tag to the resources that the Lambda functions you are about to create should act on. See below (click the orange button called “Manage tags of Selected Resources”).
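If you would rather script the tagging than click through the console, the following is a minimal sketch of one way to do it with boto3's EC2 client and its `create_tags` call. The helper name `tag_instances` and the instance ID in the usage comment are hypothetical; only the tag key and value come from this post.

```python
TAG_KEY = "auto-start-stop"
TAG_VALUE = "Yes"


def tag_instances(ec2_client, instance_ids):
    """Apply the auto-start-stop: Yes tag to the given EC2 instance IDs.

    ec2_client is expected to be a boto3 EC2 client, e.g.
    boto3.client("ec2", region_name="af-south-1").
    """
    ids = list(instance_ids)
    if not ids:
        # Nothing to tag, so skip the API call entirely.
        return []
    ec2_client.create_tags(
        Resources=ids,
        Tags=[{"Key": TAG_KEY, "Value": TAG_VALUE}],
    )
    return ids


# Usage (hypothetical instance ID, replace with your own):
#   import boto3
#   client = boto3.client("ec2", region_name="af-south-1")
#   tag_instances(client, ["i-0123456789abcdef0"])
```

Passing the client in as an argument keeps the helper easy to try against a stub before pointing it at a real account.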

Step 2: Create a new role for our lambda functions

First we need to create the IAM role that the Lambda functions will run as. Go to IAM and click the “Create Role” button. Then select “AWS Service” from the “Trusted entity options”, and select Lambda from the “Use Cases” options. Then click “Next”, followed by “Create Policy”. To specify the permissions, click the JSON button on the right of the screen and enter the policy below (swapping in your own region and account ID):

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"ec2:DescribeInstances",

"ec2:StartInstances",

"ec2:DescribeTags",

"logs:*",

"ec2:DescribeInstanceTypes",

"ec2:StopInstances",

"ec2:DescribeInstanceStatus"

],

"Resource": "arn:aws:ec2:<region>:<accountID>:instance/*",

"Condition": {

"StringEquals": {

"aws:ResourceTag/auto-start-stop": "Yes"

}

}

}

]

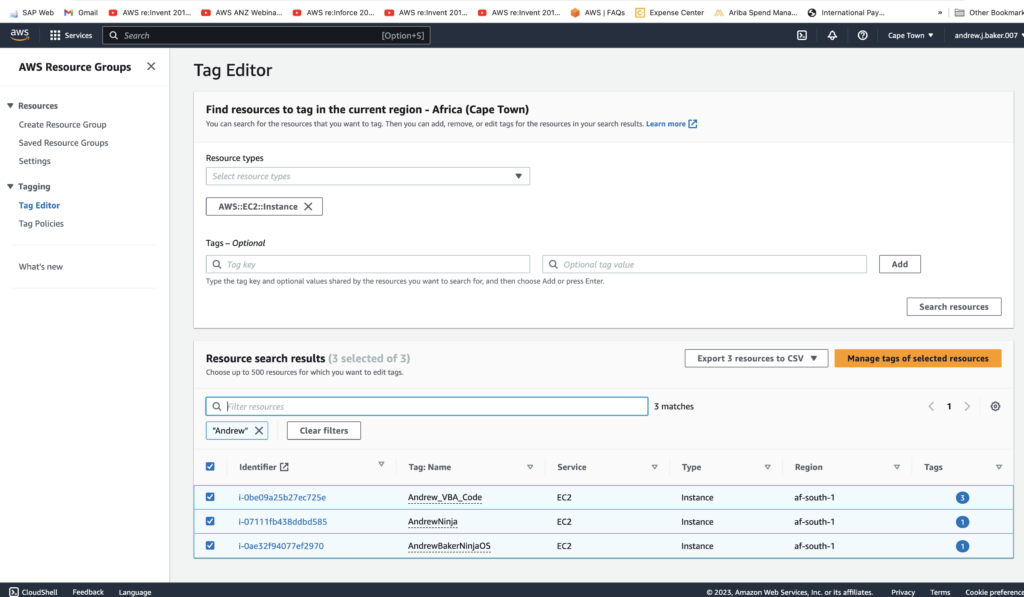

}Hit next and under “Review and create”, save the above policy as ec2-lambda-start-stop by clicking the “Create Policy” button. Next, search for this newly created policy and select it as per below and hit “Next”.

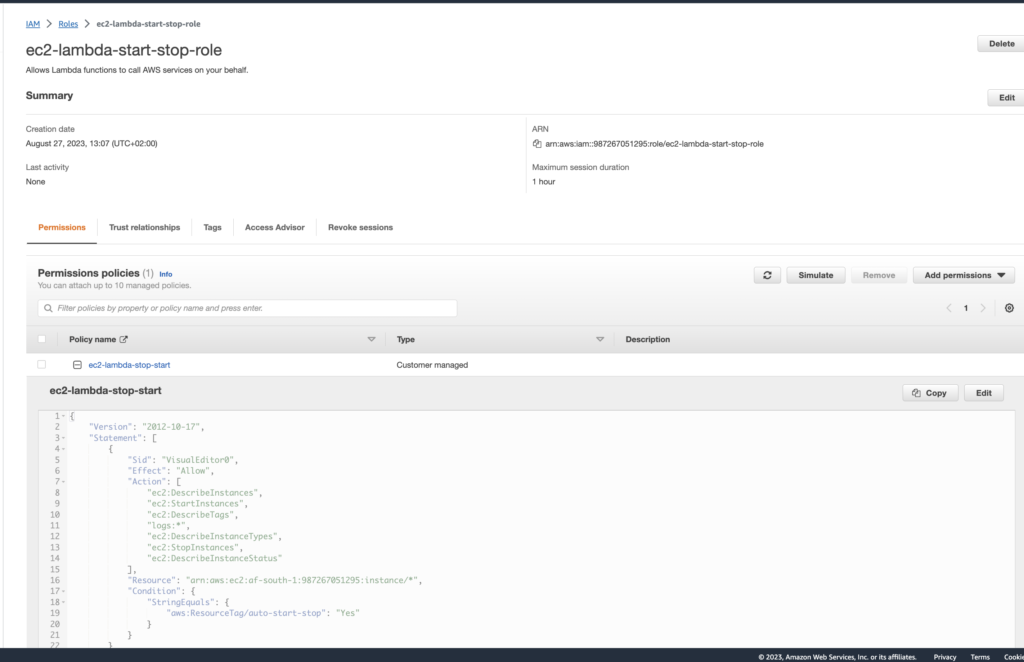

You will now see the “Name, review, and create” screen. Here you simply need to hit “Create Role” after you enter the role name as ec2-lambda-start-stop-role.

Note that the policy only allows starting and stopping EC2 instances that carry the auto-start-stop: Yes tag (least privilege).
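The console steps above can also be scripted. The sketch below is one possible way to do that with boto3's IAM client, using `create_policy`, `create_role` and `attach_role_policy`. The function name `create_lambda_role` is hypothetical, and `policy_document` is assumed to be the JSON policy from this step loaded as a Python dict with your region and account ID already filled in.

```python
import json

# Trust policy that lets the Lambda service assume the role. Choosing
# "AWS Service" and "Lambda" in the console sets up the equivalent.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_lambda_role(iam_client, policy_document,
                       role_name="ec2-lambda-start-stop-role",
                       policy_name="ec2-lambda-start-stop"):
    """Create the policy and the role, attach one to the other, return the role ARN.

    iam_client is expected to be a boto3 IAM client, e.g. boto3.client("iam").
    """
    policy_arn = iam_client.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(policy_document),
    )["Policy"]["Arn"]
    role_arn = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )["Role"]["Arn"]
    iam_client.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
    return role_arn
```

Running it a second time with the same names will fail because the policy and role already exist, so treat it as a one-off setup script rather than something to run on every deploy.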

If you want to review your role, this is how it should look. You can see I have filled in my region and account number in the policy:

Step 3: Create Lambda Functions To Start/Stop EC2 Instances

In this section we will create two lambda functions, one to start the instances and the other to stop the instances.
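Both functions follow the same pattern: filter instances by their current state and by the auto-start-stop tag, then apply the opposite action. As a rough illustration of that shared pattern (not the code used in the steps that follow), here is a sketch with a hypothetical helper `toggle_instances` that takes a boto3 EC2 resource and an action name.

```python
def toggle_instances(ec2_resource, action,
                     tag_key="auto-start-stop", tag_value="Yes"):
    """Start or stop every tagged instance that is in the opposite state.

    ec2_resource is expected to be a boto3 EC2 resource, e.g.
    boto3.resource("ec2", region_name="af-south-1").
    Returns the list of instance IDs the action was requested for.
    """
    if action not in ("start", "stop"):
        raise ValueError("action must be 'start' or 'stop'")

    # Only stopped instances can be started, and only running ones stopped.
    current_state = "stopped" if action == "start" else "running"
    matched = list(ec2_resource.instances.filter(Filters=[
        {"Name": "instance-state-name", "Values": [current_state]},
        {"Name": f"tag:{tag_key}", "Values": [tag_value]},
    ]))

    for instance in matched:
        # Calls instance.start() or instance.stop() depending on the action.
        getattr(instance, action)()
    return [instance.id for instance in matched]
```

Keeping two separate Lambda functions, as this post does, means each one can be put on its own schedule without passing any input.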

Step 3a: Add the Stop EC2 instance function

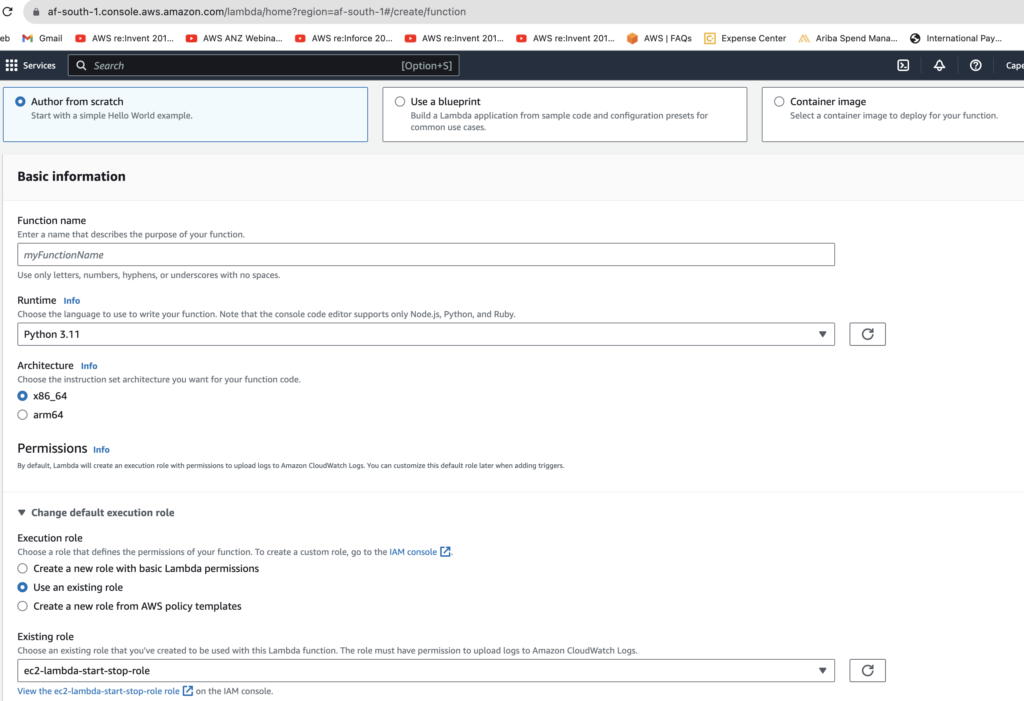

- Go to the Lambda console and click on Create function.

- Create a Lambda function with the function name stop-ec2-instance-lambda, the python3.11 runtime, and the ec2-lambda-start-stop-role role (see image below).

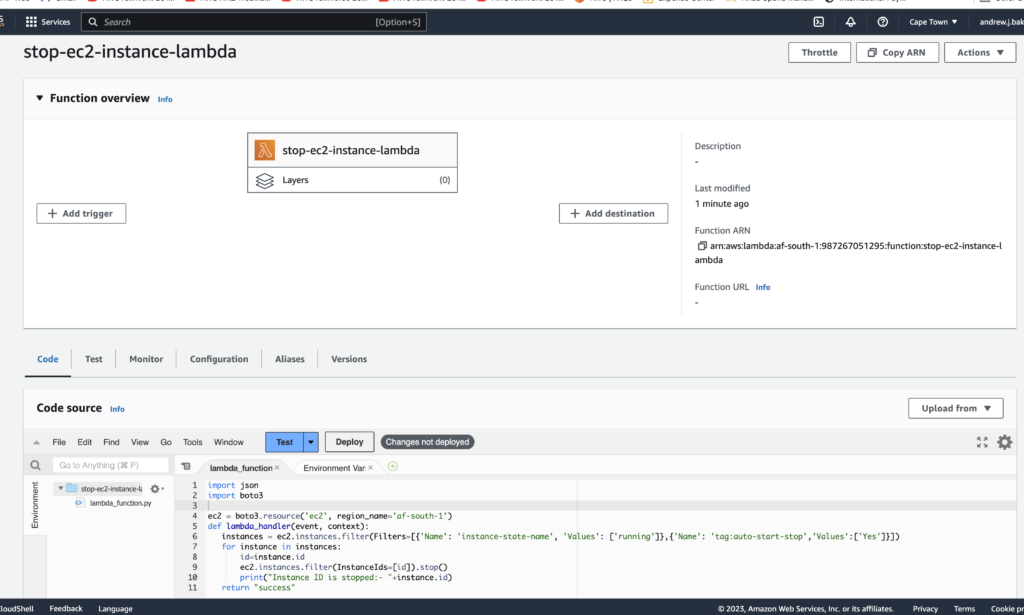

Next, add the stop code below to the stop-ec2-instance-lambda function and save it. Note that you will need to change the value of the region_name parameter to match your region.

    import boto3

    # Change region_name to the region your instances run in.
    ec2 = boto3.resource('ec2', region_name='af-south-1')

    def lambda_handler(event, context):
        # Find running instances that carry the auto-start-stop: Yes tag.
        instances = ec2.instances.filter(Filters=[
            {'Name': 'instance-state-name', 'Values': ['running']},
            {'Name': 'tag:auto-start-stop', 'Values': ['Yes']},
        ])
        for instance in instances:
            instance.stop()
            print("Stop requested for instance: " + instance.id)
        return "success"

This is how your Lambda function should look:

Step 3b: Add the Start EC2 instance function

- Go to the Lambda console and click on Create function.

- Create a Lambda function with the function name start-ec2-instance-lambda, the python3.11 runtime, and the ec2-lambda-start-stop-role role.

- Then add the code below and save the function.

Note that you will need to change the value of the region_name parameter to match your region.

    import boto3

    # Change region_name to the region your instances run in.
    ec2 = boto3.resource('ec2', region_name='af-south-1')

    def lambda_handler(event, context):
        # Find stopped instances that carry the auto-start-stop: Yes tag.
        instances = ec2.instances.filter(Filters=[
            {'Name': 'instance-state-name', 'Values': ['stopped']},
            {'Name': 'tag:auto-start-stop', 'Values': ['Yes']},
        ])
        for instance in instances:
            instance.start()
            print("Start requested for instance: " + instance.id)
        return "success"

Step 4: Summary

When either of the above Lambda functions is triggered, it will start or stop your EC2 instances based on the instance state and the value of the auto-start-stop tag. To automate this you can simply set up cron jobs, Step Functions, Amazon EventBridge, Jenkins, etc.
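As one example of the EventBridge option, the sketch below creates a scheduled rule and points it at one of the Lambda functions, using boto3's `put_rule`, `put_targets` and Lambda's `add_permission`. The helper name `schedule_lambda`, the rule name and the schedule in the usage comment are all assumptions for illustration. EventBridge cron expressions are evaluated in UTC.

```python
def schedule_lambda(events_client, lambda_client, rule_name,
                    cron_expression, function_arn):
    """Create an EventBridge schedule rule that invokes a Lambda function.

    events_client and lambda_client are expected to be boto3 clients,
    e.g. boto3.client("events") and boto3.client("lambda").
    Returns the ARN of the rule.
    """
    # Create (or update) the scheduled rule.
    rule_arn = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression=cron_expression,
        State="ENABLED",
    )["RuleArn"]

    # Allow EventBridge to invoke the function for this rule only.
    # Note: this call fails if the same StatementId already exists.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )

    # Point the rule at the function.
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "1", "Arn": function_arn}],
    )
    return rule_arn


# Usage (hypothetical names; stops instances at 18:00 UTC on weekdays):
#   import boto3
#   schedule_lambda(
#       boto3.client("events"), boto3.client("lambda"),
#       "stop-ec2-weekdays", "cron(0 18 ? * MON-FRI *)",
#       "<ARN of stop-ec2-instance-lambda>",
#   )
```

A second call with a morning schedule and the start function's ARN would complete the pair.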