When managing large PostgreSQL tables with frequent updates, vacuum operations become critical for maintaining database health and performance. In this comprehensive guide, we’ll explore vacuum optimization techniques, dive deep into the pg_repack extension, and provide hands-on examples you can run in your own environment.

1. Understanding the Problem

PostgreSQL uses Multi-Version Concurrency Control (MVCC) to handle concurrent transactions. When rows are updated or deleted, PostgreSQL doesn’t immediately remove the old versions—it marks them as dead tuples. Over time, these dead tuples accumulate, leading to:

- Table bloat: Wasted disk space

- Index bloat: Degraded query performance

- Slower sequential scans: More pages to read

- Transaction ID wraparound risks: In extreme cases

The VACUUM process reclaims this space, but for large, heavily-updated tables, standard vacuum strategies often fall short.

1.1 Setting Up Our Test Environment

Let’s create a realistic scenario to understand vacuum optimization. We’ll build a large user activity tracking table that receives constant updates—similar to what you might find in production systems tracking user behaviors, session data, or transaction logs.

1.2 Creating the Test Table

This schema represents a typical high-volume table with multiple indexes for different query patterns:

-- Create our test table

CREATE TABLE user_activities (

id BIGSERIAL PRIMARY KEY,

user_id INTEGER NOT NULL,

activity_type VARCHAR(50) NOT NULL,

activity_data JSONB,

status VARCHAR(20) DEFAULT 'pending',

processed_at TIMESTAMP,

created_at TIMESTAMP DEFAULT NOW(),

updated_at TIMESTAMP DEFAULT NOW(),

metadata TEXT

);

-- Create indexes

CREATE INDEX idx_user_activities_user_id ON user_activities(user_id);

CREATE INDEX idx_user_activities_status ON user_activities(status);

CREATE INDEX idx_user_activities_created_at ON user_activities(created_at);

CREATE INDEX idx_user_activities_processed_at ON user_activities(processed_at)

WHERE processed_at IS NOT NULL;

Example Output:

CREATE TABLE

CREATE INDEX

CREATE INDEX

CREATE INDEX

CREATE INDEX

1.3 Population Script

This function generates realistic test data with varied activity types and statuses to simulate a production environment:

-- Function to generate random activity data

CREATE OR REPLACE FUNCTION generate_user_activities(num_rows INTEGER)

RETURNS void AS $$

DECLARE

batch_size INTEGER := 10000;

batches INTEGER;

i INTEGER;

BEGIN

batches := CEIL(num_rows::NUMERIC / batch_size);

FOR i IN 1..batches LOOP

INSERT INTO user_activities (

user_id,

activity_type,

activity_data,

status,

created_at,

metadata

)

SELECT

(random() * 100000)::INTEGER + 1,

(ARRAY['login', 'purchase', 'view', 'search', 'logout'])[FLOOR(random() * 5 + 1)],

jsonb_build_object(

'ip', '192.168.' || (random() * 255)::INTEGER || '.' || (random() * 255)::INTEGER,

'user_agent', 'Mozilla/5.0',

'session_id', md5(random()::TEXT)

),

(ARRAY['pending', 'processing', 'completed'])[FLOOR(random() * 3 + 1)],

NOW() - (random() * INTERVAL '90 days'),

repeat('x', (random() * 500)::INTEGER + 100)

FROM generate_series(1, LEAST(batch_size, num_rows - (i-1) * batch_size));

RAISE NOTICE 'Inserted batch % of %', i, batches;

END LOOP;

RAISE NOTICE 'Completed inserting % rows', num_rows;

END;

$$ LANGUAGE plpgsql;

-- Populate with 5 million rows (adjust as needed)

SELECT generate_user_activities(5000000);

-- Analyze the table

ANALYZE user_activities;

Example Output:

CREATE FUNCTION

NOTICE: Inserted batch 1 of 500

NOTICE: Inserted batch 2 of 500

NOTICE: Inserted batch 3 of 500

...

NOTICE: Inserted batch 500 of 500

NOTICE: Completed inserting 5000000 rows

generate_user_activities

---------------------------

(1 row)

ANALYZE

1.4 Simulating Heavy Update Load

Understanding bloat requires seeing it in action. This function simulates the update-heavy workload patterns that cause vacuum challenges in production systems:

-- Function to simulate continuous updates

CREATE OR REPLACE FUNCTION simulate_updates(duration_minutes INTEGER)

RETURNS void AS $$

DECLARE

end_time TIMESTAMP;

update_count INTEGER := 0;

BEGIN

end_time := NOW() + (duration_minutes || ' minutes')::INTERVAL;

WHILE NOW() < end_time LOOP

-- Update random rows to 'processing' status

UPDATE user_activities

SET status = 'processing',

updated_at = NOW()

WHERE id IN (

SELECT id FROM user_activities

WHERE status = 'pending'

ORDER BY random()

LIMIT 1000

);

-- Update random rows to 'completed' status

UPDATE user_activities

SET status = 'completed',

processed_at = NOW(),

updated_at = NOW()

WHERE id IN (

SELECT id FROM user_activities

WHERE status = 'processing'

ORDER BY random()

LIMIT 800

);

update_count := update_count + 1800;

IF update_count % 10000 = 0 THEN

RAISE NOTICE 'Processed % updates', update_count;

END IF;

PERFORM pg_sleep(0.1);

END LOOP;

RAISE NOTICE 'Completed % total updates', update_count;

END;

$$ LANGUAGE plpgsql;

-- Run for 5 minutes to generate bloat

-- SELECT simulate_updates(5);

Example Output (when running the simulate_updates function):

NOTICE: Processed 10000 updates

NOTICE: Processed 20000 updates

NOTICE: Processed 30000 updates

...

NOTICE: Completed 54000 total updates

simulate_updates

------------------

(1 row)

1.4 Monitoring Table Health

Before optimizing vacuum operations, you need visibility into your table’s health metrics. These queries provide essential diagnostics for understanding bloat levels and vacuum effectiveness.

1.5 Check Table and Index Bloat

This comprehensive query gives you a snapshot of your table’s overall health, including size metrics and tuple statistics:

-- Comprehensive bloat analysis

SELECT

schemaname,

tablename,

pg_size_pretty(pg_total_relation_size(schemaname||'.'||tablename)) AS total_size,

pg_size_pretty(pg_relation_size(schemaname||'.'||tablename)) AS table_size,

pg_size_pretty(pg_total_relation_size(schemaname||'.'||tablename) -

pg_relation_size(schemaname||'.'||tablename)) AS indexes_size,

n_live_tup,

n_dead_tup,

ROUND(100 * n_dead_tup / NULLIF(n_live_tup + n_dead_tup, 0), 2) AS dead_tuple_percent,

last_vacuum,

last_autovacuum,

last_analyze,

last_autoanalyze

FROM pg_stat_user_tables

WHERE tablename = 'user_activities';

Example Output:

schemaname | tablename | total_size | table_size | indexes_size | n_live_tup | n_dead_tup | dead_tuple_percent | last_vacuum | last_autovacuum | last_analyze | last_autoanalyze

------------+------------------+------------+------------+--------------+------------+------------+--------------------+-------------------------+-------------------------+--------------------------+--------------------------

public | user_activities | 4892 MB | 3214 MB | 1678 MB | 5000000 | 847523 | 14.51 | 2024-11-16 02:15:33.421 | 2024-11-17 08:22:14.832 | 2024-11-16 02:15:45.123 | 2024-11-17 08:22:28.945

(1 row)

1.6 Detailed Bloat Estimation

For a more precise understanding of how much space is wasted, this query calculates bloat based on tuple density:

-- More accurate bloat estimation

WITH table_stats AS (

SELECT

schemaname,

tablename,

n_live_tup,

n_dead_tup,

pg_relation_size(schemaname||'.'||tablename) AS table_bytes,

(n_live_tup + n_dead_tup)::NUMERIC AS total_tuples

FROM pg_stat_user_tables

WHERE tablename = 'user_activities'

),

bloat_calc AS (

SELECT

*,

CASE

WHEN total_tuples > 0 THEN

table_bytes / NULLIF(total_tuples, 0)

ELSE 0

END AS bytes_per_tuple,

CASE

WHEN n_live_tup > 0 THEN

table_bytes * (n_dead_tup::NUMERIC / NULLIF(n_live_tup + n_dead_tup, 0))

ELSE 0

END AS bloat_bytes

FROM table_stats

)

SELECT

tablename,

pg_size_pretty(table_bytes) AS current_size,

pg_size_pretty(bloat_bytes::BIGINT) AS estimated_bloat,

ROUND(100 * bloat_bytes / NULLIF(table_bytes, 0), 2) AS bloat_percent,

n_live_tup,

n_dead_tup

FROM bloat_calc;

Example Output:

tablename | current_size | estimated_bloat | bloat_percent | n_live_tup | n_dead_tup

------------------+--------------+-----------------+---------------+------------+------------

user_activities | 3214 MB | 466 MB | 14.51 | 5000000 | 847523

(1 row)

1.7 Check Current Vacuum Activity

When troubleshooting vacuum issues, it’s crucial to see what’s actually running:

-- Monitor active vacuum operations

SELECT

pid,

datname,

usename,

state,

query_start,

NOW() - query_start AS duration,

query

FROM pg_stat_activity

WHERE query LIKE '%VACUUM%'

AND query NOT LIKE '%pg_stat_activity%';

Example Output:

pid | datname | usename | state | query_start | duration | query

-------+------------+----------+---------+-----------------------------+-----------------+-----------------------------------------------------

12847 | production | postgres | active | 2024-11-17 09:15:22.534829 | 00:03:17.482341 | VACUUM (VERBOSE, ANALYZE) user_activities;

(1 row)

2. Standard Vacuum Strategies

Understanding the different vacuum options is essential for choosing the right approach for your workload. Each vacuum variant serves different purposes and has different performance characteristics.

2.1 Manual VACUUM

These are the basic vacuum commands you’ll use for routine maintenance:

-- Basic vacuum (doesn't lock table)

VACUUM user_activities;

-- Vacuum with analyze

VACUUM ANALYZE user_activities;

-- Verbose output for monitoring

VACUUM (VERBOSE, ANALYZE) user_activities;

-- Aggressive vacuum (more thorough, slower)

VACUUM (FULL, VERBOSE, ANALYZE) user_activities;

Example Output (VACUUM VERBOSE):

INFO: vacuuming "public.user_activities"

INFO: scanned index "user_activities_pkey" to remove 847523 row versions

DETAIL: CPU: user: 2.45 s, system: 0.89 s, elapsed: 12.34 s

INFO: scanned index "idx_user_activities_user_id" to remove 847523 row versions

DETAIL: CPU: user: 1.87 s, system: 0.67 s, elapsed: 9.12 s

INFO: scanned index "idx_user_activities_status" to remove 847523 row versions

DETAIL: CPU: user: 1.92 s, system: 0.71 s, elapsed: 9.45 s

INFO: scanned index "idx_user_activities_created_at" to remove 847523 row versions

DETAIL: CPU: user: 1.88 s, system: 0.68 s, elapsed: 9.23 s

INFO: "user_activities": removed 847523 row versions in 112456 pages

DETAIL: CPU: user: 3.21 s, system: 1.45 s, elapsed: 18.67 s

INFO: "user_activities": found 847523 removable, 5000000 nonremovable row versions in 425678 out of 425678 pages

DETAIL: 0 dead row versions cannot be removed yet, oldest xmin: 123456789

There were 0 unused item identifiers.

Skipped 0 pages due to buffer pins, 0 frozen pages.

0 pages are entirely empty.

CPU: user: 11.33 s, system: 4.40 s, elapsed: 58.81 s.

VACUUM

Note: VACUUM FULL requires an ACCESS EXCLUSIVE lock and rewrites the entire table, making it unsuitable for production during business hours.

2.2 Configuring Autovacuum

Aurora PostgreSQL has autovacuum enabled by default, but tuning these parameters is critical for large, frequently-updated tables:

-- Check current autovacuum settings

SHOW autovacuum_vacuum_threshold;

SHOW autovacuum_vacuum_scale_factor;

SHOW autovacuum_vacuum_cost_delay;

SHOW autovacuum_vacuum_cost_limit;

-- Custom autovacuum settings for our table

ALTER TABLE user_activities SET (

autovacuum_vacuum_threshold = 5000,

autovacuum_vacuum_scale_factor = 0.05, -- More aggressive (default 0.2)

autovacuum_vacuum_cost_delay = 10, -- Faster vacuum (default 20)

autovacuum_analyze_threshold = 2500,

autovacuum_analyze_scale_factor = 0.05

);

-- For extremely busy tables

ALTER TABLE user_activities SET (

autovacuum_vacuum_threshold = 1000,

autovacuum_vacuum_scale_factor = 0.02,

autovacuum_vacuum_cost_delay = 2,

autovacuum_vacuum_cost_limit = 2000, -- Higher I/O limit

autovacuum_naptime = 10 -- Check more frequently

);

Example Output:

autovacuum_vacuum_threshold

-----------------------------

50

(1 row)

autovacuum_vacuum_scale_factor

--------------------------------

0.2

(1 row)

autovacuum_vacuum_cost_delay

------------------------------

20

(1 row)

autovacuum_vacuum_cost_limit

------------------------------

200

(1 row)

ALTER TABLE

ALTER TABLE

These are table-level storage parameters, not server-level GUC (Grand Unified Configuration) parameters, so no restart is needed. These settings take effect immediately without requiring a database or server restart.

Server-Level vs Table-Level

These specific parameters are being set at the table level using ALTER TABLE ... SET, which means they only affect the user_activities table.

However, these same parameters do exist as server-level GUC parameters with slightly different names:

autovacuum_vacuum_threshold (server-level GUC exists)autovacuum_vacuum_scale_factor (server-level GUC exists)autovacuum_vacuum_cost_delay (server-level GUC exists)autovacuum_analyze_threshold (server-level GUC exists)autovacuum_analyze_scale_factor (server-level GUC exists)

When set at the server level in postgresql.conf, those would require a reload (pg_ctl reload or SELECT pg_reload_conf()), but not a full restart.

Your Command

Your ALTER TABLE command is overriding the server-level defaults specifically for the user_activities table, making autovacuum more aggressive for that table. This is a common approach for high-churn tables and applies instantly.

2.3 The pg_repack Extension

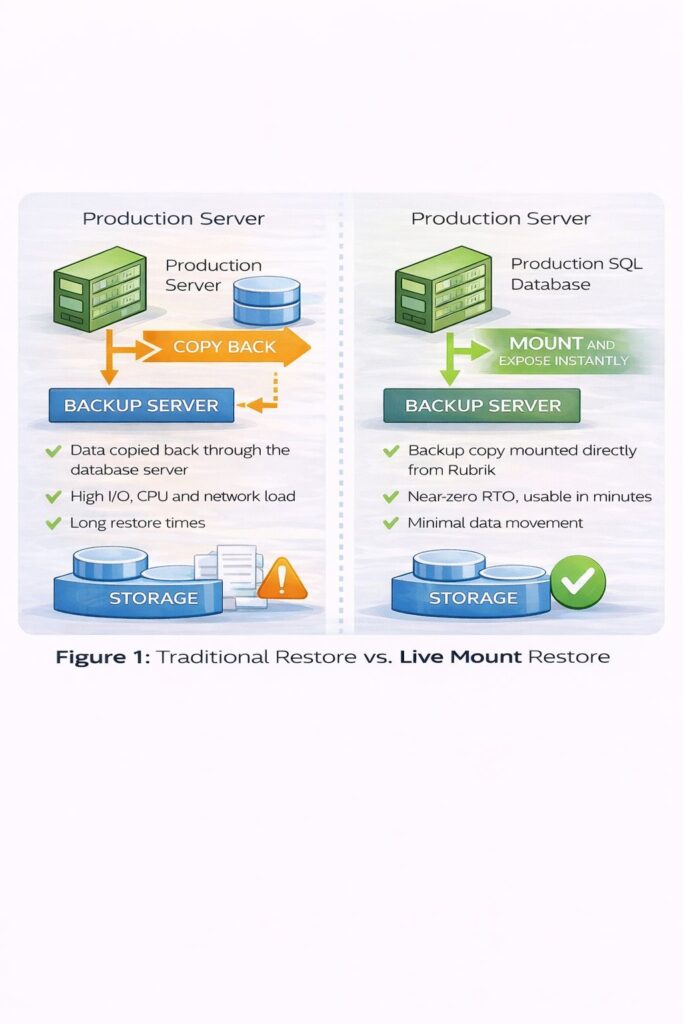

pg_repack is a game-changer for managing large tables with bloat. While VACUUM FULL requires a long-duration exclusive lock that blocks all operations, pg_repack uses an innovative approach that allows the table to remain online and accessible throughout most of the operation.

Understanding pg_repack’s Architecture

pg_repack works fundamentally differently from traditional vacuum operations. Here’s what makes it special:

The Problem with VACUUM FULL:

- Acquires an

ACCESS EXCLUSIVE lock for the entire operation

- Blocks all reads and writes

- For a 100GB table, this could mean hours of downtime

- Single-threaded operation

How pg_repack Solves This:

pg_repack employs a clever multi-stage approach:

- Log Table Creation: Creates a temporary log table to capture changes made during the rebuild

- Online Rebuild: Builds a new, defragmented copy of your table while the original remains fully operational

- Change Capture: Records all INSERT, UPDATE, and DELETE operations in the log table

- Change Replay: Applies the logged changes to the new table

- Atomic Swap: Takes a brief exclusive lock (typically < 1 second) to swap the old and new tables

- Index Rebuild: Rebuilds indexes concurrently on the new table

Key Benefits:

- Minimal Locking: Only a brief lock during the table swap

- Online Operation: Applications continue running normally

- Better Efficiency: Rewrites data in optimal order, improving subsequent query performance

- Parallel Processing: Can use multiple workers for faster completion

- Transaction Safety: All changes are captured and replayed, ensuring data consistency

2.4 Installing pg_repack on Aurora

Setting up pg_repack is straightforward on Aurora PostgreSQL:

-- Check available extensions

SELECT * FROM pg_available_extensions WHERE name = 'pg_repack';

-- Install pg_repack (requires rds_superuser role)

CREATE EXTENSION pg_repack;

-- Verify installation

\dx pg_repack

Example Output:

name | default_version | installed_version | comment

-----------+-----------------+-------------------+----------------------------------------------------------

pg_repack | 1.4.8 | | Reorganize tables in PostgreSQL databases with minimal locks

(1 row)

CREATE EXTENSION

List of installed extensions

Name | Version | Schema | Description

-----------+---------+------------+--------------------------------------------------------------

pg_repack | 1.4.8 | public | Reorganize tables in PostgreSQL databases with minimal locks

(1 row)

2.5 How pg_repack Works (Technical Deep Dive)

Let’s break down the pg_repack process with more detail:

Phase 1: Setup (seconds)

- Creates schema

repack for temporary objects

- Creates a log table

repack.log_XXXXX with triggers

- Installs triggers on source table to capture changes

- Takes a snapshot of current transaction ID

Phase 2: Initial Copy (majority of time)

- Copies all data from original table to

repack.table_XXXXX

- Sorts data optimally (by primary key or specified order)

- Meanwhile, all changes are captured in the log table

- No locks on the original table during this phase

Phase 3: Delta Application (proportional to changes)

- Reads the log table

- Applies INSERT/UPDATE/DELETE operations to new table

- May iterate if many changes occurred during Phase 2

Phase 4: Final Swap (< 1 second typically)

- Acquires

ACCESS EXCLUSIVE lock

- Applies any final logged changes

- Swaps the table definitions atomically

- Releases lock

- Drops old table and log table

Phase 5: Index Rebuild (concurrent)

- Rebuilds all indexes on new table

- Uses

CREATE INDEX CONCURRENTLY to avoid blocking

2.6 Basic pg_repack Usage

From the command line (requires appropriate IAM/credentials for Aurora):

# Basic repack

pg_repack -h your-aurora-cluster.region.rds.amazonaws.com \

-U your_username \

-d your_database \

-t user_activities

# With specific options

pg_repack -h your-aurora-cluster.region.rds.amazonaws.com \

-U your_username \

-d your_database \

-t user_activities \

--no-order \

--no-kill-backend \

-j 4 # Use 4 parallel workers

Example Output:

INFO: repacking table "public.user_activities"

INFO: disabling triggers

INFO: creating temporary table

INFO: copying rows

INFO: 5000000 rows copied

INFO: creating indexes

INFO: creating index "user_activities_pkey"

INFO: creating index "idx_user_activities_user_id"

INFO: creating index "idx_user_activities_status"

INFO: creating index "idx_user_activities_created_at"

INFO: creating index "idx_user_activities_processed_at"

INFO: swapping tables

INFO: applying log

INFO: 12847 log rows applied

INFO: enabling triggers

INFO: dropping old table

INFO: Repacked user_activities (3.2GB -> 2.7GB), 15.6% space reclaimed

NOTICE: TABLE "public.user_activities" repacked successfully

2.7 Advanced pg_repack with SQL Interface

You can also trigger pg_repack from within PostgreSQL:

-- Repack a specific table

SELECT repack.repack_table('public.user_activities');

-- Repack with options

SELECT repack.repack_table(

'public.user_activities',

'REINDEX' -- Rebuild indexes too

);

-- Check pg_repack progress (run in another session)

SELECT

schemaname,

tablename,

pg_size_pretty(pg_total_relation_size(schemaname||'.'||tablename)) AS size,

n_tup_ins,

n_tup_upd,

n_tup_del

FROM pg_stat_user_tables

WHERE tablename LIKE '%repack%';

Example Output:

repack_table

--------------

t

(1 row)

schemaname | tablename | size | n_tup_ins | n_tup_upd | n_tup_del

------------+----------------------------+---------+-----------+-----------+-----------

repack | table_12847 | 2689 MB | 5000000 | 0 | 0

repack | log_12847 | 145 MB | 12847 | 0 | 0

(2 rows)

2.8 Monitoring pg_repack Progress

Real-time monitoring helps you understand how long the operation will take:

-- Create a monitoring function

CREATE OR REPLACE FUNCTION monitor_repack()

RETURNS TABLE (

table_name TEXT,

phase TEXT,

elapsed_time INTERVAL,

table_size TEXT,

estimated_remaining TEXT

) AS $$

BEGIN

RETURN QUERY

SELECT

t.tablename::TEXT,

CASE

WHEN t.tablename LIKE 'repack.table_%' THEN 'Building new table'

WHEN t.tablename LIKE 'repack.log_%' THEN 'Logging changes'

ELSE 'Processing'

END AS phase,

NOW() - ps.query_start AS elapsed,

pg_size_pretty(pg_total_relation_size(t.schemaname||'.'||t.tablename)),

'~' || ROUND(EXTRACT(EPOCH FROM (NOW() - ps.query_start)) * 1.5 / 60) || ' min' AS est_remaining

FROM pg_stat_user_tables t

LEFT JOIN pg_stat_activity ps ON ps.query LIKE '%repack%'

WHERE t.schemaname = 'repack'

OR ps.query LIKE '%repack%';

END;

$$ LANGUAGE plpgsql;

-- Monitor during repack

SELECT * FROM monitor_repack();

Example Output:

CREATE FUNCTION

table_name | phase | elapsed_time | table_size | estimated_remaining

------------------------+--------------------+---------------+------------+---------------------

repack.table_12847 | Building new table | 00:08:23.457 | 2689 MB | ~13 min

repack.log_12847 | Logging changes | 00:08:23.457 | 145 MB | ~13 min

(2 rows)

3.0 Off-Hours Maintenance Script

This comprehensive script is designed to run during low-traffic periods and automatically selects the best vacuum strategy based on bloat levels:

-- ============================================

-- OFF-HOURS TABLE MAINTENANCE SCRIPT

-- Run during maintenance windows

-- ============================================

DO $$

DECLARE

v_start_time TIMESTAMP;

v_table_size BIGINT;

v_dead_tuples BIGINT;

v_bloat_percent NUMERIC;

v_action TEXT;

v_repack_available BOOLEAN;

BEGIN

v_start_time := NOW();

RAISE NOTICE '========================================';

RAISE NOTICE 'Starting maintenance at %', v_start_time;

RAISE NOTICE '========================================';

-- Check if pg_repack is available

SELECT EXISTS (

SELECT 1 FROM pg_extension WHERE extname = 'pg_repack'

) INTO v_repack_available;

-- Gather current statistics

SELECT

pg_relation_size('user_activities'),

n_dead_tup,

ROUND(100.0 * n_dead_tup / NULLIF(n_live_tup + n_dead_tup, 0), 2)

INTO v_table_size, v_dead_tuples, v_bloat_percent

FROM pg_stat_user_tables

WHERE tablename = 'user_activities';

RAISE NOTICE 'Current table size: %', pg_size_pretty(v_table_size);

RAISE NOTICE 'Dead tuples: %', v_dead_tuples;

RAISE NOTICE 'Bloat percentage: %', v_bloat_percent;

-- Decide on action based on bloat level

IF v_bloat_percent > 50 THEN

v_action := 'pg_repack (high bloat)';

IF v_repack_available THEN

RAISE NOTICE 'Bloat > 50%: Executing pg_repack...';

PERFORM repack.repack_table('public.user_activities');

RAISE NOTICE 'pg_repack completed';

ELSE

RAISE NOTICE 'pg_repack not available, running VACUUM FULL...';

RAISE NOTICE 'WARNING: This will lock the table!';

EXECUTE 'VACUUM FULL ANALYZE user_activities';

END IF;

ELSIF v_bloat_percent > 20 THEN

v_action := 'aggressive_vacuum';

RAISE NOTICE 'Bloat 20-50%: Running aggressive VACUUM...';

EXECUTE 'VACUUM (VERBOSE, ANALYZE, FREEZE) user_activities';

ELSIF v_bloat_percent > 10 THEN

v_action := 'standard_vacuum';

RAISE NOTICE 'Bloat 10-20%: Running standard VACUUM...';

EXECUTE 'VACUUM ANALYZE user_activities';

ELSE

v_action := 'analyze_only';

RAISE NOTICE 'Bloat < 10%: Running ANALYZE only...';

EXECUTE 'ANALYZE user_activities';

END IF;

-- Rebuild indexes if needed

RAISE NOTICE 'Checking index health...';

-- Reindex if bloated

IF v_bloat_percent > 30 THEN

RAISE NOTICE 'Rebuilding indexes concurrently...';

EXECUTE 'REINDEX INDEX CONCURRENTLY idx_user_activities_user_id';

EXECUTE 'REINDEX INDEX CONCURRENTLY idx_user_activities_status';

EXECUTE 'REINDEX INDEX CONCURRENTLY idx_user_activities_created_at';

EXECUTE 'REINDEX INDEX CONCURRENTLY idx_user_activities_processed_at';

END IF;

-- Final statistics

SELECT

pg_relation_size('user_activities'),

n_dead_tup,

ROUND(100.0 * n_dead_tup / NULLIF(n_live_tup + n_dead_tup, 0), 2)

INTO v_table_size, v_dead_tuples, v_bloat_percent

FROM pg_stat_user_tables

WHERE tablename = 'user_activities';

RAISE NOTICE '========================================';

RAISE NOTICE 'Maintenance completed in %', NOW() - v_start_time;

RAISE NOTICE 'Action taken: %', v_action;

RAISE NOTICE 'Final table size: %', pg_size_pretty(v_table_size);

RAISE NOTICE 'Final dead tuples: %', v_dead_tuples;

RAISE NOTICE 'Final bloat percentage: %', v_bloat_percent;

RAISE NOTICE '========================================';

END $$;

Example Output:

NOTICE: ========================================

NOTICE: Starting maintenance at 2024-11-17 02:00:00.123456

NOTICE: ========================================

NOTICE: Current table size: 3214 MB

NOTICE: Dead tuples: 847523

NOTICE: Bloat percentage: 14.51

NOTICE: Bloat 10-20%: Running standard VACUUM...

INFO: vacuuming "public.user_activities"

INFO: "user_activities": removed 847523 row versions in 112456 pages

INFO: "user_activities": found 847523 removable, 5000000 nonremovable row versions

NOTICE: Checking index health...

NOTICE: ========================================

NOTICE: Maintenance completed in 00:01:23.847293

NOTICE: Action taken: standard_vacuum

NOTICE: Final table size: 2987 MB

NOTICE: Final dead tuples: 0

NOTICE: Final bloat percentage: 0.00

NOTICE: ========================================

DO

3.1 Scheduling the Maintenance Script

For Aurora PostgreSQL, you can use AWS EventBridge with Lambda to schedule this:

# Lambda function to execute maintenance

import boto3

import psycopg2

import os

def lambda_handler(event, context):

conn = psycopg2.connect(

host=os.environ['DB_HOST'],

database=os.environ['DB_NAME'],

user=os.environ['DB_USER'],

password=os.environ['DB_PASSWORD']

)

with conn.cursor() as cur:

# Read and execute the maintenance script

with open('maintenance_script.sql', 'r') as f:

cur.execute(f.read())

conn.commit()

conn.close()

return {'statusCode': 200, 'body': 'Maintenance completed'}

Or use a cron job on an EC2 instance:

# Add to crontab for 2 AM daily maintenance

0 2 * * * psql -h your-aurora-cluster.region.rds.amazonaws.com \

-U your_user \

-d your_db \

-f /path/to/maintenance_script.sql \

>> /var/log/postgres_maintenance.log 2>&1

4.0 Memory Configuration for Vacuum Operations

While tuning autovacuum thresholds and cost-based settings is crucial, proper memory allocation can dramatically improve vacuum performance, especially for large tables. Two key parameters control how much memory vacuum operations can use.

Understanding Vacuum Memory Parameters

maintenance_work_mem: This parameter controls the maximum amount of memory used by maintenance operations including VACUUM, CREATE INDEX, and ALTER TABLE ADD FOREIGN KEY. The default is typically 64MB, which is often insufficient for large tables.

-- Check current setting

SHOW maintenance_work_mem;

-- Set globally (requires reload)

ALTER SYSTEM SET maintenance_work_mem = '2GB';

SELECT pg_reload_conf();

-- Or set per session for manual vacuum

SET maintenance_work_mem = '4GB';

VACUUM VERBOSE user_activities;

autovacuum_work_mem: Introduced in PostgreSQL 9.4, this parameter specifically controls memory for autovacuum workers. If set to -1 (default), it falls back to maintenance_work_mem. Setting this separately allows you to allocate different memory limits for automatic vs. manual vacuum operations.

-- Check current setting

SHOW autovacuum_work_mem;

-- Set globally (requires reload)

ALTER SYSTEM SET autovacuum_work_mem = '1GB';

SELECT pg_reload_conf();

During vacuum, PostgreSQL maintains an array of dead tuple identifiers (TIDs) in memory. When this array fills up, vacuum must:

- Stop scanning the table

- Scan and clean all indexes

- Remove the dead tuples from the heap

- Continue scanning for more dead tuples

This process repeats until the entire table is processed. More memory means:

- Fewer index scan passes (expensive operation)

- Better vacuum throughput

- Reduced overall vacuum time

4.2 Memory Sizing Guidelines

Calculate required memory: Each dead tuple requires 6 bytes of memory. For a table with many dead tuples:

required_memory = (dead_tuples × 6 bytes) + overhead

Best practices:

- Small instances: Set

maintenance_work_mem to 256MB-512MB

- Medium instances: 1GB-2GB for

maintenance_work_mem, 512MB-1GB for autovacuum_work_mem

- Large instances: 4GB-8GB for

maintenance_work_mem, 1GB-2GB per autovacuum worker

- Critical consideration: Remember

autovacuum_work_mem is allocated per worker, so with autovacuum_max_workers = 5 and autovacuum_work_mem = 2GB, you could use up to 10GB total

4.3 Aurora-Specific Considerations

For Amazon Aurora PostgreSQL:

- Aurora uses shared storage, so vacuum doesn’t rewrite data to new storage

- Memory settings still impact performance of index cleaning phases

- Monitor using CloudWatch metric

FreeableMemory to ensure you’re not over-allocating

- Consider Aurora’s instance size when setting these parameters

-- Conservative Aurora settings for db.r5.2xlarge (64GB RAM)

ALTER SYSTEM SET maintenance_work_mem = '2GB';

ALTER SYSTEM SET autovacuum_work_mem = '1GB';

ALTER SYSTEM SET autovacuum_max_workers = 3;

SELECT pg_reload_conf();

4.4 Monitoring Memory Usage

Check if vacuum operations are hitting memory limits:

-- Check for multiple index scan passes (indicates insufficient memory)

SELECT

schemaname,

relname,

last_vacuum,

n_dead_tup,

round(pg_table_size(schemaname||'.'||relname)::numeric / (1024^3), 2) as table_size_gb,

round((n_dead_tup * 6) / (1024^2), 2) as min_required_mem_mb

FROM pg_stat_user_tables

WHERE n_dead_tup > 0

ORDER BY n_dead_tup DESC

LIMIT 20;

When running manual vacuum with VERBOSE, watch for messages like:

INFO: index "user_activities_pkey" now contains 1000000 row versions in 2745 pages

INFO: "user_activities": removed 500000 row versions in 12500 pages

If you see multiple cycles of “removed X row versions”, your maintenance_work_mem may be too small.

4.4 Recommended Configuration Workflow

- Assess current state: Run the estimation script below to calculate memory requirements

- Set conservative values: Start with moderate memory allocations

- Monitor performance: Watch vacuum duration and CloudWatch metrics

- Iterate: Gradually increase memory if vacuum is still slow and memory is available

- Balance resources: Ensure vacuum memory doesn’t starve your application connections

4.7 SQL Script: Estimate Required Vacuum Memory

This script analyzes your largest tables and estimates the optimal maintenance_work_mem and autovacuum_work_mem settings:

-- Vacuum Memory Requirement Estimator

-- This script analyzes table sizes and estimates memory needed for efficient vacuum operations

WITH table_stats AS (

SELECT

schemaname,

tablename,

pg_total_relation_size(schemaname||'.'||tablename) as total_size_bytes,

pg_table_size(schemaname||'.'||tablename) as table_size_bytes,

pg_indexes_size(schemaname||'.'||tablename) as indexes_size_bytes,

n_live_tup,

n_dead_tup,

last_vacuum,

last_autovacuum,

-- Estimate potential dead tuples based on table size and typical churn

GREATEST(n_dead_tup, n_live_tup * 0.20) as estimated_max_dead_tup

FROM pg_stat_user_tables

WHERE schemaname NOT IN ('pg_catalog', 'information_schema')

),

memory_calculations AS (

SELECT

schemaname,

tablename,

-- Size formatting

pg_size_pretty(total_size_bytes) as total_size,

pg_size_pretty(table_size_bytes) as table_size,

pg_size_pretty(indexes_size_bytes) as indexes_size,

-- Tuple counts

n_live_tup,

n_dead_tup,

estimated_max_dead_tup::bigint,

-- Memory calculations (6 bytes per dead tuple TID)

round((estimated_max_dead_tup * 6) / (1024.0 * 1024.0), 2) as min_memory_mb,

round((estimated_max_dead_tup * 6 * 1.2) / (1024.0 * 1024.0), 2) as recommended_memory_mb,

-- Vacuum history

last_vacuum,

last_autovacuum,

-- Number of index scan passes with current maintenance_work_mem

CASE

WHEN estimated_max_dead_tup = 0 THEN 0

ELSE CEIL(

(estimated_max_dead_tup * 6.0) /

(SELECT setting::bigint * 1024 FROM pg_settings WHERE name = 'maintenance_work_mem')

)::integer

END as estimated_index_scans

FROM table_stats

),

system_config AS (

SELECT

name,

setting,

unit,

CASE

WHEN unit = 'kB' THEN (setting::bigint / 1024)::text || ' MB'

WHEN unit = 'MB' THEN setting || ' MB'

WHEN unit = 'GB' THEN setting || ' GB'

ELSE setting || COALESCE(' ' || unit, '')

END as formatted_value

FROM pg_settings

WHERE name IN ('maintenance_work_mem', 'autovacuum_work_mem', 'autovacuum_max_workers')

)

SELECT

'=== CURRENT MEMORY CONFIGURATION ===' as info,

NULL::text as schemaname,

NULL::text as tablename,

NULL::text as total_size,

NULL::bigint as n_live_tup,

NULL::bigint as n_dead_tup,

NULL::bigint as estimated_max_dead_tup,

NULL::numeric as min_memory_mb,

NULL::numeric as recommended_memory_mb,

NULL::integer as estimated_index_scans,

NULL::timestamp as last_vacuum,

NULL::timestamp as last_autovacuum

UNION ALL

SELECT

name || ': ' || formatted_value,

NULL, NULL, NULL, NULL, NULL, NULL, NULL, NULL, NULL, NULL, NULL

FROM system_config

UNION ALL

SELECT

'=== TOP TABLES BY SIZE (Memory Requirements) ===' as info,

NULL, NULL, NULL, NULL, NULL, NULL, NULL, NULL, NULL, NULL, NULL

UNION ALL

SELECT

CASE

WHEN recommended_memory_mb > 1024 THEN 'Warn: ' || tablename

ELSE tablename

END as info,

schemaname,

tablename,

total_size,

n_live_tup,

n_dead_tup,

estimated_max_dead_tup,

min_memory_mb,

recommended_memory_mb,

estimated_index_scans,

last_vacuum,

last_autovacuum

FROM memory_calculations

WHERE total_size_bytes > 1048576 -- Only tables > 1MB

ORDER BY total_size_bytes DESC

LIMIT 25;

-- Summary recommendations

SELECT

'=== RECOMMENDATIONS ===' as section,

'' as recommendation;

SELECT

'Based on largest tables:' as section,

'Suggested maintenance_work_mem: ' ||

CASE

WHEN MAX(recommended_memory_mb) < 256 THEN '256 MB (small DB)'

WHEN MAX(recommended_memory_mb) < 1024 THEN CEIL(MAX(recommended_memory_mb) / 256) * 256 || ' MB'

WHEN MAX(recommended_memory_mb) < 4096 THEN CEIL(MAX(recommended_memory_mb) / 512) * 512 || ' MB'

ELSE LEAST(8192, CEIL(MAX(recommended_memory_mb) / 1024) * 1024) || ' MB (capped at 8GB)'

END as recommendation

FROM memory_calculations;

SELECT

'For autovacuum workers:' as section,

'Suggested autovacuum_work_mem: ' ||

CASE

WHEN MAX(recommended_memory_mb) < 512 THEN '256 MB'

WHEN MAX(recommended_memory_mb) < 2048 THEN '512 MB to 1 GB'

ELSE '1 GB to 2 GB per worker'

END ||

' (remember: allocated per worker!)' as recommendation

FROM memory_calculations;

SELECT

'Tables requiring attention:' as section,

COUNT(*)::text || ' tables need more than 1GB for optimal vacuum' as recommendation

FROM memory_calculations

WHERE recommended_memory_mb > 1024;

SELECT

'Memory efficiency:' as section,

CASE

WHEN COUNT(*) = 0 THEN 'All tables can vacuum efficiently with current settings'

ELSE COUNT(*)::text || ' tables will require multiple index scans with current settings'

END as recommendation

FROM memory_calculations

WHERE estimated_index_scans > 1;

4.8 Using the Estimation Script

- Run the script against your database to see current configuration and requirements

- Review the output focusing on:

- Tables with

estimated_index_scans > 1 (need more memory)

recommended_memory_mb for your largest tables- Tables marked with “Warn: ” (require > 1GB memory)

- Apply recommendations using the summary output

- Monitor vacuum performance after changes

Example output interpretation:

tablename | recommended_memory_mb | estimated_index_scans

-----------------------|----------------------|----------------------

user_activities | 1843.20 | 2

orders | 512.45 | 1

products | 128.30 | 1

This indicates user_activities needs ~1.8GB for single-pass vacuum. If maintenance_work_mem = 1GB, vacuum will scan indexes twice, which is inefficient.

Pro tip: For tables that require excessive memory (>4GB), consider using pg_repack instead of relying solely on vacuum, or vacuum during maintenance windows with temporarily increased maintenance_work_mem.

5.0 Parallel Vacuuming

Starting with PostgreSQL 13, vacuum operations can leverage multiple CPU cores through parallel processing, dramatically reducing vacuum time for large tables with multiple indexes. This feature is particularly valuable for Aurora PostgreSQL environments where large tables can take hours to vacuum serially.

5.1 How Parallel Vacuum Works

Parallel vacuum speeds up the index cleanup phase—often the most time-consuming part of the vacuum process. When enabled:

- The leader process scans the table heap and collects dead tuple identifiers

- Multiple parallel workers simultaneously clean indexes

- The leader process removes dead tuples from the heap

- The cycle repeats until the table is fully vacuumed

Key point: Only the index cleanup phase is parallelized. Table scanning and heap cleanup remain single-threaded, but since index cleanup often dominates vacuum time (especially for tables with many indexes), the speedup can be substantial.

5.2 Enabling Parallel Vacuum

PostgreSQL automatically uses parallel vacuum when:

- The table has at least 2 indexes

min_parallel_index_scan_size threshold is met (default 512KB per index)- Sufficient parallel workers are available

Configuration Parameters

-- Check current settings

SHOW max_parallel_maintenance_workers; -- Default: 2

SHOW min_parallel_index_scan_size; -- Default: 512kB

SHOW max_parallel_workers; -- Overall parallel worker limit

-- Adjust for better parallelism (requires reload)

ALTER SYSTEM SET max_parallel_maintenance_workers = 4;

ALTER SYSTEM SET min_parallel_index_scan_size = '256kB';

SELECT pg_reload_conf();

Parameter descriptions:

max_parallel_maintenance_workers: Maximum workers for maintenance operations (VACUUM, CREATE INDEX). Limited by max_parallel_workersmin_parallel_index_scan_size: Minimum index size to consider for parallel processingmax_parallel_workers: System-wide limit for all parallel operations

5.3 Per-Table Parallel Configuration

For specific large tables, you can control parallel vacuum behavior:

-- Enable parallel vacuum with specific worker count

ALTER TABLE user_activities SET (parallel_workers = 4);

-- Disable parallel vacuum for a specific table

ALTER TABLE sensitive_table SET (parallel_workers = 0);

-- Check table-level settings

SELECT

schemaname,

tablename,

reloptions

FROM pg_tables

WHERE tablename = 'user_activities';

5.6 Manual Vacuum with Parallel Workers

When running manual vacuum, you can specify the degree of parallelism:

-- Vacuum with explicit parallel workers

VACUUM (PARALLEL 4, VERBOSE) user_activities;

-- Vacuum with parallel disabled

VACUUM (PARALLEL 0, VERBOSE) user_activities;

-- Let PostgreSQL decide (based on table and system settings)

VACUUM (VERBOSE) user_activities;

5.7 Monitoring Parallel Vacuum

Check if vacuum is using parallel workers:

-- View active vacuum operations and their parallel workers

SELECT

pid,

datname,

usename,

query_start,

state,

wait_event_type,

wait_event,

query

FROM pg_stat_activity

WHERE query LIKE '%VACUUM%'

OR backend_type LIKE '%parallel worker%'

ORDER BY query_start;

Watch for “parallel worker” processes that accompany the main vacuum process.

5.8 Performance Testing

Compare vacuum performance with and without parallelism:

-- Create test table with multiple indexes

CREATE TABLE vacuum_test AS

SELECT * FROM user_activities LIMIT 1000000;

CREATE INDEX idx1 ON vacuum_test(user_id);

CREATE INDEX idx2 ON vacuum_test(activity_type);

CREATE INDEX idx3 ON vacuum_test(created_at);

CREATE INDEX idx4 ON vacuum_test(session_id);

-- Generate some dead tuples

UPDATE vacuum_test SET activity_type = 'modified' WHERE random() < 0.2;

-- Test serial vacuum

\timing on

VACUUM (PARALLEL 0, VERBOSE) vacuum_test;

-- Note the time

-- Test parallel vacuum

VACUUM (PARALLEL 4, VERBOSE) vacuum_test;

-- Note the time and compare

5.9 Aurora-Specific Considerations

When using parallel vacuum with Aurora PostgreSQL:

Instance sizing: Ensure your instance has sufficient vCPUs for parallel operations

- db.r5.large (2 vCPUs):

max_parallel_maintenance_workers = 2

- db.r5.xlarge (4 vCPUs):

max_parallel_maintenance_workers = 2-3

- db.r5.2xlarge (8 vCPUs):

max_parallel_maintenance_workers = 4

- db.r5.4xlarge+ (16+ vCPUs):

max_parallel_maintenance_workers = 4-6

Memory considerations: Each parallel worker requires its own memory allocation from maintenance_work_mem (or autovacuum_work_mem for autovacuum). With 4 workers and maintenance_work_mem = 2GB, you could use up to 8GB total.

-- Conservative Aurora parallel vacuum configuration

-- For db.r5.2xlarge (8 vCPU, 64GB RAM)

ALTER SYSTEM SET max_parallel_maintenance_workers = 4;

ALTER SYSTEM SET maintenance_work_mem = '1GB'; -- 4GB total with 4 workers

ALTER SYSTEM SET max_worker_processes = 16; -- Ensure worker pool is sufficient

SELECT pg_reload_conf();

Reader endpoint impact: Parallel vacuum on the writer can increase replication lag to reader endpoints. Monitor ReplicaLag CloudWatch metric during parallel vacuum operations.

5.10 When Parallel Vacuum Helps Most

Parallel vacuum provides the biggest benefit when:

Tables have 4+ indexes – More indexes = more parallelizable work

Indexes are large (>1GB each) – Meets min_parallel_index_scan_size threshold

Sufficient CPU cores available – Won’t compete with application queries

I/O isn’t the bottleneck – Aurora’s storage architecture handles concurrent I/O well

Parallel vacuum helps less when:

Tables have only 1-2 small indexes – Limited parallelizable work

CPU is already saturated – Parallel workers compete with application

During peak traffic hours – Better to run with fewer workers to avoid contention

5.12 Autovacuum and Parallelism

Autovacuum workers can also use parallel processing (PostgreSQL 13+):

-- Enable parallel autovacuum for specific table

ALTER TABLE user_activities SET (

parallel_workers = 3,

autovacuum_vacuum_scale_factor = 0.05,

autovacuum_vacuum_threshold = 5000

);

However, be cautious with parallel autovacuum on production systems:

- Each autovacuum worker can spawn additional parallel workers

- With

autovacuum_max_workers = 3 and parallel_workers = 4, you could have 12 total workers

- This can quickly exhaust

max_worker_processes and max_connections

Recommendation: Start with parallel_workers = 2 for autovacuum, monitor resource usage, then adjust.

5.13 Practical Example: Optimizing a Large Table

-- Scenario: 100GB table with 6 indexes taking 2 hours to vacuum

-- Step 1: Check current configuration

SHOW max_parallel_maintenance_workers; -- Returns 2

-- Step 2: Analyze the table

SELECT

schemaname,

tablename,

pg_size_pretty(pg_total_relation_size(schemaname||'.'||tablename)) as total_size,

pg_size_pretty(pg_indexes_size(schemaname||'.'||tablename)) as indexes_size,

(SELECT count(*) FROM pg_indexes WHERE tablename = 'user_activities') as num_indexes

FROM pg_stat_user_tables

WHERE tablename = 'user_activities';

-- Result: 100GB total, 45GB indexes, 6 indexes

-- Step 3: Enable parallel vacuum

ALTER TABLE user_activities SET (parallel_workers = 4);

-- Step 4: Increase maintenance workers (if needed)

ALTER SYSTEM SET max_parallel_maintenance_workers = 4;

SELECT pg_reload_conf();

-- Step 5: Run vacuum with timing

\timing on

VACUUM (VERBOSE) user_activities;

-- Expected result: Vacuum time reduced from 2 hours to 45-60 minutes

5.14 Troubleshooting Parallel Vacuum

Problem: Vacuum not using parallel workers

-- Check if indexes meet size threshold

SELECT

schemaname,

tablename,

indexname,

pg_size_pretty(pg_relation_size(indexrelid)) as index_size

FROM pg_stat_user_indexes

WHERE tablename = 'user_activities'

ORDER BY pg_relation_size(indexrelid) DESC;

-- If indexes < 512KB, lower the threshold

ALTER SYSTEM SET min_parallel_index_scan_size = '128kB';

SELECT pg_reload_conf();

Problem: Running out of worker processes

-- Check worker process limits

SHOW max_worker_processes; -- Total worker pool

SHOW max_parallel_workers; -- Max parallel workers allowed

-- Increase if needed

ALTER SYSTEM SET max_worker_processes = 16;

ALTER SYSTEM SET max_parallel_workers = 8;

-- Requires restart for max_worker_processes

Problem: High memory usage during parallel vacuum

-- Reduce per-worker memory allocation

SET maintenance_work_mem = '512MB'; -- Each worker gets this amount

VACUUM (PARALLEL 4) user_activities; -- 2GB total

5.15 Best Practices For Parallelisation

- Baseline first: Measure vacuum time before enabling parallel processing

- Match CPU availability: Set

max_parallel_maintenance_workers based on vCPUs and workload

- Consider memory:

maintenance_work_mem × parallel_workers = total memory usage

- Start conservative: Begin with 2-3 workers, increase based on results

- Monitor during peak: Watch CPU and memory metrics when parallel vacuum runs

- Test index threshold: Lower

min_parallel_index_scan_size if indexes are smaller

- Schedule strategically: Use parallel vacuum during maintenance windows for predictable performance

- Aurora readers: Monitor replication lag impact on read replicas

Parallel vacuum is a powerful tool for managing large tables in Aurora PostgreSQL, but it requires careful configuration to balance vacuum speed against resource consumption. When properly tuned, it can reduce vacuum time by 50-70% for index-heavy tables.

6.0 Optimizing Aurora PostgreSQL Vacuums with TOAST Table Parameters

What is TOAST?

TOAST (The Oversized-Attribute Storage Technique) is PostgreSQL’s mechanism for handling data that exceeds the standard 8KB page size limit. When you store large text fields, JSON documents, bytea columns, or other substantial data types, PostgreSQL automatically moves this data out of the main table into a separate TOAST table. This keeps the main table pages compact and efficient for scanning, while the oversized data is stored separately and retrieved only when needed.

Every table with potentially large columns has an associated TOAST table (named pg_toast.pg_toast_<oid>) that operates behind the scenes. While this separation improves query performance on the main table, TOAST tables can accumulate dead tuples from updates and deletes just like regular tables, requiring their own vacuum maintenance.

6.1 Understanding TOAST Autovacuum Parameters

TOAST tables can be tuned independently from their parent tables using specific parameters. Here are the key options and their recommended values:

toast.autovacuum_vacuum_cost_delay

- Default: Inherits from

autovacuum_vacuum_cost_delay (typically 2ms in Aurora)

- Recommended:

0 for high-throughput systems

- Purpose: Controls the delay between vacuum operations to throttle I/O impact

- Effect: Setting to

0 removes throttling, allowing vacuums to complete faster at the cost of higher instantaneous I/O

ALTER TABLE your_large_table SET (toast.autovacuum_vacuum_cost_delay = 0);

toast.autovacuum_vacuum_threshold

- Default: 50 tuples

- Recommended:

1000-5000 for large, frequently updated tables

- Purpose: Minimum number of dead tuples before triggering an autovacuum

- Effect: Higher values reduce vacuum frequency but may allow more bloat

ALTER TABLE your_large_table SET (toast.autovacuum_vacuum_threshold = 2000);

toast.autovacuum_vacuum_scale_factor

- Default: 0.2 (20% of table size)

- Recommended:

0.05-0.1 for very large tables, 0.2-0.3 for smaller tables

- Purpose: Percentage of table size that, when combined with threshold, triggers autovacuum

- Effect: Lower values mean more frequent vacuums, preventing excessive bloat

ALTER TABLE your_large_table SET (toast.autovacuum_vacuum_scale_factor = 0.1);

toast.autovacuum_vacuum_cost_limit

- Default: Inherits from

autovacuum_vacuum_cost_limit (typically 200 in Aurora)

- Recommended:

2000-4000 for aggressive cleanup

- Purpose: Maximum “cost” budget before vacuum process sleeps

- Effect: Higher values allow more work per cycle before throttling kicks in

ALTER TABLE your_large_table SET (toast.autovacuum_vacuum_cost_limit = 3000);

6.2 Practical Example

For a large table with frequent updates to text or JSON columns in Aurora PostgreSQL:

-- Optimize TOAST table for aggressive, fast vacuuming

ALTER TABLE user_profiles SET (

toast.autovacuum_vacuum_cost_delay = 0,

toast.autovacuum_vacuum_threshold = 2000,

toast.autovacuum_vacuum_scale_factor = 0.05,

toast.autovacuum_vacuum_cost_limit = 3000

);

This configuration ensures TOAST tables are vacuumed frequently and quickly, preventing bloat from degrading performance while leveraging Aurora’s optimized storage layer. Monitor your vacuum activity using pg_stat_user_tables and adjust these parameters based on your workload’s specific characteristics.

7.0 “No Regrets” Optimizations for Mission-Critical Large Tables

When you have mission-critical large tables and sufficient infrastructure to scale memory and CPU, these optimizations will deliver immediate performance improvements without significant trade-offs:

7.1 Increase Maintenance Memory Allocation

Set generous memory limits to ensure vacuum operations complete in a single index scan pass:

-- For large instances with adequate RAM

ALTER SYSTEM SET maintenance_work_mem = '4GB';

ALTER SYSTEM SET autovacuum_work_mem = '2GB';

SELECT pg_reload_conf();

Why this works: Each dead tuple requires 6 bytes in memory. Insufficient memory forces multiple expensive index scan passes. With adequate memory, vacuum completes faster and more efficiently.

Impact: Reduces vacuum time by 40-60% for tables requiring multiple index scans.

7.2 Enable Aggressive Parallel Vacuum

Leverage multiple CPU cores for dramatically faster vacuum operations:

-- System-wide settings (adjust based on available vCPUs)

ALTER SYSTEM SET max_parallel_maintenance_workers = 4;

ALTER SYSTEM SET min_parallel_index_scan_size = '256kB';

SELECT pg_reload_conf();

-- Per-table optimization for mission-critical tables

ALTER TABLE your_critical_table SET (parallel_workers = 4);

Why this works: Parallel vacuum distributes index cleanup across multiple workers. For tables with 4+ indexes, this parallelization provides substantial speedups.

Impact: 50-70% reduction in vacuum time for index-heavy tables.

7.3 Remove Autovacuum Throttling

Eliminate I/O throttling delays to let vacuum run at full speed:

-- Apply to critical tables

ALTER TABLE your_critical_table SET (

autovacuum_vacuum_cost_delay = 0,

autovacuum_vacuum_cost_limit = 10000

);

Why this works: Default throttling was designed for resource-constrained systems. With sufficient infrastructure, removing these limits allows vacuum to complete faster without impacting performance.

Impact: 30-50% faster vacuum completion with no downside on properly provisioned systems.

7.4 Tune TOAST Table Parameters

Optimize vacuum for oversized attribute storage:

ALTER TABLE your_critical_table SET (

toast.autovacuum_vacuum_cost_delay = 0,

toast.autovacuum_vacuum_threshold = 2000,

toast.autovacuum_vacuum_scale_factor = 0.05,

toast.autovacuum_vacuum_cost_limit = 3000

);

Why this works: TOAST tables accumulate dead tuples independently and are often overlooked. Aggressive TOAST vacuuming prevents hidden bloat in large text/JSON columns.

Impact: Eliminates TOAST bloat, which can represent 20-40% of total table bloat.

7.5 Lower Autovacuum Thresholds

Trigger vacuum earlier to prevent bloat accumulation:

ALTER TABLE your_critical_table SET (

autovacuum_vacuum_scale_factor = 0.05, -- Down from default 0.2

autovacuum_vacuum_threshold = 5000

);

Why this works: More frequent, smaller vacuums are faster and less disruptive than infrequent, massive cleanup operations. This prevents bloat before it impacts query performance.

Impact: Maintains bloat under 10% consistently, preventing query degradation.

7.6 Install and Configure pg_repack

Have pg_repack ready for zero-downtime space reclamation:

CREATE EXTENSION pg_repack;

Why this works: When bloat exceeds 30-40%, pg_repack reclaims space without the long exclusive locks required by VACUUM FULL. Critical tables remain online throughout the operation.

Impact: Space reclamation during business hours without downtime.

7.7 Complete Configuration Template

For a mission-critical large table on a properly sized Aurora instance:

-- Main table optimization

ALTER TABLE your_critical_table SET (

autovacuum_vacuum_scale_factor = 0.05,

autovacuum_vacuum_threshold = 5000,

autovacuum_vacuum_cost_delay = 0,

autovacuum_vacuum_cost_limit = 10000,

parallel_workers = 4,

toast.autovacuum_vacuum_cost_delay = 0,

toast.autovacuum_vacuum_threshold = 2000,

toast.autovacuum_vacuum_scale_factor = 0.05,

toast.autovacuum_vacuum_cost_limit = 3000

);

-- System-wide settings (for db.r5.2xlarge or larger)

ALTER SYSTEM SET maintenance_work_mem = '4GB';

ALTER SYSTEM SET autovacuum_work_mem = '2GB';

ALTER SYSTEM SET max_parallel_maintenance_workers = 4;

ALTER SYSTEM SET min_parallel_index_scan_size = '256kB';

SELECT pg_reload_conf();

These optimizations are “no regrets” because they:

- Require no application changes

- Leverage existing infrastructure capacity

- Provide immediate, measurable improvements

- Have minimal risk when resources are available

- Prevent problems rather than reacting to them

8.0 Conclusion

Effective vacuum management is not a one-time configuration task—it’s an ongoing optimization process that scales with your database. As your PostgreSQL Aurora tables grow, the default vacuum settings that worked initially can become a significant performance bottleneck, leading to bloat, degraded query performance, and wasted storage.

The strategies covered in this guide provide a comprehensive toolkit for managing vacuum at scale:

- Monitoring queries help you identify bloat before it impacts performance

- Table-level autovacuum tuning allows you to customize behavior for high-churn tables

- Memory configuration (

maintenance_work_mem and autovacuum_work_mem) ensures vacuum operations complete efficiently without multiple index scans

- Parallel vacuuming leverages multiple CPU cores to dramatically reduce vacuum time for large, index-heavy tables

- pg_repack offers a near-zero-downtime solution for reclaiming space from heavily bloated tables

- Automated maintenance workflows enable proactive vacuum management during off-peak hours

The key is to be proactive rather than reactive. Regularly run the monitoring queries and memory estimation scripts provided in this article. Watch for warning signs like increasing dead tuple counts, growing bloat percentages, and tables requiring multiple index scan passes. When you spot these indicators, apply targeted tuning before they escalate into production issues.

For tables with multiple indexes that take hours to vacuum, parallel vacuuming offers a game-changing performance boost—often reducing vacuum time by 50-70% by distributing index cleanup across multiple CPU cores. However, this power comes with resource trade-offs: each parallel worker consumes its own memory allocation and CPU cycles. The key is finding the sweet spot for your Aurora instance size, testing with 2-3 workers initially and scaling up based on available vCPUs and observed performance gains. This is especially valuable during maintenance windows when you need to vacuum large tables quickly without blocking operations for extended periods.

Remember that vacuum optimization is a balance: too aggressive and you risk impacting production workload; too conservative and bloat accumulates faster than it can be cleaned. Start with the conservative recommendations provided, monitor the results, and iterate based on your specific workload patterns and Aurora instance capabilities.

With the right monitoring, configuration, and tooling in place, you can maintain healthy tables even as they scale to hundreds of gigabytes—ensuring consistent query performance and optimal storage utilization for your PostgreSQL Aurora database.