The in-memory data store landscape fractured in March 2024 when Redis Inc abandoned its BSD 3-clause licence in favour of the dual RSALv2/SSPLv1 model. The community response was swift and surgical: Valkey emerged as a Linux Foundation-backed fork, supported by AWS, Google Cloud, Oracle, Alibaba, Tencent, and Ericsson. Eighteen months later, the two projects have diverged significantly, and the choice between them involves far more than licensing philosophy.

1. The Fork That Changed Everything

When Redis Inc made its licensing move, the stated rationale was to stop cloud providers offering Redis as a managed service without contributing back. The irony was immediate. AWS and Google Cloud responded by backing Valkey with their best Redis engineers. Tencent’s Binbin Zhu alone had contributed nearly a quarter of all Redis open source commits. Valkey’s technical leadership committee now has over 26 years of combined Redis experience and more than 1,000 commits to the codebase.

Redis Inc CEO Rowan Trollope dismissed the fork at the time, asserting that innovation would differentiate Redis. What he perhaps underestimated was that the innovators had just walked out the door.

By May 2025, Redis pivoted again, adding AGPLv3 as a licensing option for Redis 8. The company brought back Salvatore Sanfilippo (antirez), Redis’s original creator. The messaging was careful: “Redis as open source again.” But the damage to community trust was done. As Kyle Davis, a Valkey maintainer, stated after the September 2024 Valkey 8.0 release: “From this point forward, Redis and Valkey are two different pieces of software.”

2. Architecture: Same Foundation, Diverging Paths

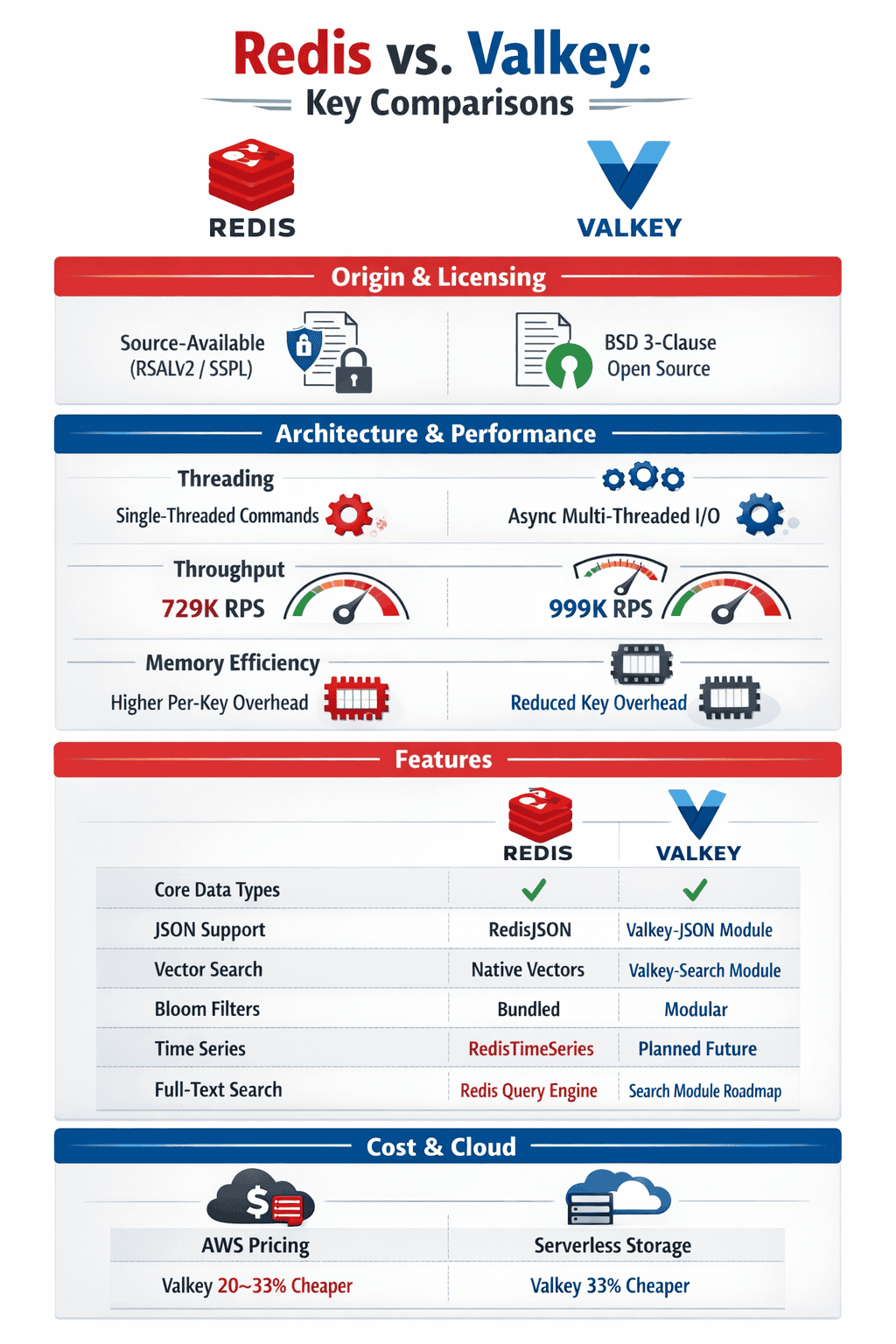

Both Redis and Valkey maintain the single-threaded command execution model that ensures atomicity without locks or context switches. This architectural principle remains sacred. What differs is how each project uses additional threads for I/O operations.

2.1 Threading Models

Redis introduced multi-threaded I/O in version 6.0, offloading socket reads and writes to worker threads while keeping data manipulation single-threaded. Redis 8.0 enhanced this with a new I/O threading model, for which Redis claims up to a 112% throughput improvement when setting io-threads to 8 on multi-core Intel CPUs.

Valkey took a more aggressive approach. The async I/O threading implementation in Valkey 8.0, contributed primarily by AWS engineers, allows the main thread and I/O threads to operate concurrently. The I/O threads handle reading, parsing, writing responses, polling for I/O events, and even memory deallocation. The main thread orchestrates jobs to I/O threads while executing commands, and the number of active I/O threads adjusts dynamically based on load.

The results speak clearly. On AWS Graviton r7g instances, Valkey 8.0 achieves 1.2 million queries per second compared to 380K QPS in Valkey 7.2, more than a threefold increase. Independent benchmarks from Momento on c8g.2xlarge instances (8 vCPU) showed Valkey 8.1.1 reaching 999.8K RPS on SET operations with 0.8ms p99 latency, while Redis 8.0 achieved 729.4K RPS with 0.99ms p99 latency. That is 37% higher throughput and roughly 19% lower p99 latency on writes; the same benchmarks reported 60%+ lower p99 latencies on reads.
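The percentages can be checked directly from the quoted SET figures. The following is a minimal sketch; the helper name `pct_change` is illustrative, and only the four Momento numbers quoted above are used as inputs.

```python
def pct_change(baseline: float, value: float) -> float:
    """Percentage change of `value` relative to `baseline`."""
    return (value - baseline) / baseline * 100.0


# Momento SET figures quoted in the text (Valkey 8.1.1 vs Redis 8.0).
valkey_rps, redis_rps = 999_800, 729_400
valkey_p99_ms, redis_p99_ms = 0.80, 0.99

throughput_gain = pct_change(redis_rps, valkey_rps)
p99_change = pct_change(redis_p99_ms, valkey_p99_ms)

print(f"SET throughput: {throughput_gain:+.1f}%")
print(f"SET p99 latency: {p99_change:+.1f}%")
```

The read-latency comparison cannot be reproduced the same way because the underlying read figures are not quoted here.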

2.2 Memory Efficiency

Valkey 8.0 introduced a redesigned hash table implementation that reduces memory overhead per key. The 8.1 release pushed further, with a reduction of roughly 20 bytes per key-value pair for keys without TTL, and up to 30 bytes for keys with TTL. For a dataset with 50 million keys, that translates to roughly 1GB of saved memory, rising towards 1.5GB if every key carries a TTL.
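As a quick sanity check on that estimate, the saving is simply key count multiplied by bytes saved per key. This sketch uses the per-key figures quoted above; the function name is illustrative and decimal gigabytes (10^9 bytes) are assumed.

```python
def memory_saved_bytes(num_keys: int, bytes_saved_per_key: int) -> int:
    """Total bytes saved when every key shrinks by a fixed amount."""
    return num_keys * bytes_saved_per_key


keys = 50_000_000
low = memory_saved_bytes(keys, 20)   # keys without TTL
high = memory_saved_bytes(keys, 30)  # keys with TTL (upper bound quoted)

print(f"{low / 1e9:.1f} GB to {high / 1e9:.1f} GB saved")
```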

Redis 8.0 counters with its own optimisations, but the Valkey improvements came from engineers intimately familiar with the original Redis codebase. Google Cloud’s benchmarks show Memorystore for Valkey 8.0 achieving 2x QPS at microsecond latency compared to Memorystore for Redis Cluster.

3. Feature Comparison

3.1 Core Data Types

Both support the standard Redis data types: strings, hashes, lists, sets, sorted sets, streams, HyperLogLog, bitmaps, and geospatial indexes. Both support Lua scripting and Pub/Sub messaging.

3.2 JSON Support

Redis 8.0 bundles RedisJSON (previously a separate module) directly into core, available under the AGPLv3 licence. This provides native JSON document storage with partial updates and JSONPath queries.

Valkey responded with valkey-json, an official module compatible with Valkey 8.0 and above. As of Valkey 8.1, JSON support is production-ready through the valkey-bundle container, which packages valkey-json 1.0, valkey-bloom 1.0, valkey-search 1.0, and valkey-ldap 1.0 together.

3.3 Vector Search

This is where the AI workload story becomes interesting.

Redis 8.0 introduced vector sets as a new native data type, designed by Sanfilippo himself. It provides high dimensional similarity search directly in core, positioning Redis for semantic search, RAG pipelines, and recommendation systems.

Valkey’s approach is modular. valkey-search provides exact KNN search alongside HNSW approximate nearest neighbour search, capable of searching billions of vectors with millisecond latencies and over 99% recall. Google Cloud contributed their vector search module to the project, and it is now the official search module for Valkey OSS. Memorystore for Valkey can perform vector search at single-digit millisecond latency on over a billion vectors.

The architectural difference matters. Redis embeds vector capability in core; Valkey keeps it modular. For organisations wanting a smaller attack surface or not needing vector search, Valkey’s approach offers more control.
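To make the KNN versus HNSW distinction concrete, here is a minimal sketch of exact KNN by cosine similarity in plain Python. It is not how valkey-search or Redis vector sets are implemented; the document names and three-dimensional vectors are made up for illustration. Exact KNN scores every stored vector, which is why approximate indexes such as HNSW, which walk a graph and visit only a fraction of the vectors, are preferred at scale.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def exact_knn(query: Vector, vectors: Dict[str, Vector], k: int) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k most similar."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical embeddings, for illustration only.
docs = {
    "doc:cache": [0.9, 0.1, 0.0],
    "doc:queue": [0.1, 0.9, 0.0],
    "doc:search": [0.0, 0.2, 0.9],
}
nearest = exact_knn([1.0, 0.0, 0.0], docs, k=2)
print(nearest)
```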

3.4 Probabilistic Data Structures

Both now offer Bloom filters. Redis bundles RedisBloom in core; Valkey provides valkey-bloom as a module. Bloom filters use roughly 98% less memory than traditional sets for membership testing with an acceptable false positive rate.
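The 98% figure is plausible under the textbook Bloom filter sizing formula, where the optimal number of bits per item is -ln(p) / (ln 2)^2 for a false positive rate p. The sketch below assumes a 1% false positive rate and a hypothetical 60 bytes per member for a conventional set; neither number comes from RedisBloom or valkey-bloom, whose internal sizing may differ.

```python
import math


def bloom_bits_per_item(false_positive_rate: float) -> float:
    """Optimal bits per item for a standard Bloom filter: -ln(p) / (ln 2)^2."""
    return -math.log(false_positive_rate) / (math.log(2) ** 2)


def optimal_hash_count(bits_per_item: float) -> int:
    """Optimal number of hash functions: (m / n) * ln 2, rounded."""
    return max(1, round(bits_per_item * math.log(2)))


# Assumption for illustration: average memory cost of one member in a plain set.
ASSUMED_SET_BYTES_PER_MEMBER = 60

bits = bloom_bits_per_item(0.01)
bloom_bytes = bits / 8
saving = 1 - bloom_bytes / ASSUMED_SET_BYTES_PER_MEMBER

print(f"{bits:.2f} bits per item, {optimal_hash_count(bits)} hash functions")
print(f"memory saving vs set: {saving:.1%}")
```

The saving depends heavily on member size: short members in a compactly encoded set narrow the gap, long strings widen it.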

3.5 Time Series

Redis 8.0 bundles RedisTimeSeries in core. Valkey does not yet have a native time series module, though the roadmap indicates interest.

3.6 Search and Query

Redis 8.0 includes the Redis Query Engine (formerly RediSearch), providing secondary indexing, full-text search, and aggregation capabilities.

valkey-search currently focuses on vector search but explicitly states its goal is to extend Valkey into a full search engine supporting full-text search. As of late 2025, that is roadmap rather than shipped product.

4. Licensing: The Uncomfortable Conversation

4.1 Redis Licensing (as of Redis 8.0)

Redis now offers a tri-licence model: RSALv2, SSPLv1, and AGPLv3. Users choose one.

AGPLv3 is OSI-approved open source, but organisations often avoid it due to its copyleft requirements. If you modify Redis and offer it as a network service, you must release your modifications. Many enterprise legal teams treat AGPL as functionally similar to proprietary for internal use policies.

RSALv2 and SSPLv1 are source available but not open source by OSI definition. Both restrict offering Redis as a managed service without licensing arrangements.

The practical implication: most enterprises consuming Redis 8.0 will either use it unmodified (which sidesteps AGPL concerns) or license Redis commercially.

4.2 Valkey Licensing

Valkey remains BSD 3-clause. Full stop. You can fork it, modify it, commercialise it, and offer it as a managed service without restriction. This is why AWS, Google Cloud, Oracle, and dozens of others are building their managed offerings on Valkey.

For financial services institutions subject to regulatory scrutiny around software licensing, Valkey’s licence clarity is a non-trivial advantage.

5. Commercial Considerations: AWS Reference Pricing

AWS has made its position clear through pricing. The discounts for Valkey versus Redis OSS are substantial and consistent across services.

5.1 Annual Cost Comparison by Cluster Size

The following table shows annual costs for typical ElastiCache node-based deployments in us-east-1 using r7g Graviton3 instances. All configurations assume high availability with one replica per shard. Pricing reflects on-demand rates; reserved instances reduce costs further but maintain the same 20% Valkey discount.
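The monthly and annual figures in the tables follow from the hourly rate, the node count, and an assumed 730 hours per month. This sketch reproduces the Small and Medium rows; the function name is illustrative, and the larger rows differ by a few dollars of rounding.

```python
from typing import Tuple

HOURS_PER_MONTH = 730  # assumption: average hours in a month


def node_cluster_cost(hourly_per_node: float, nodes: int) -> Tuple[int, int]:
    """Return (monthly, annual) cost in whole dollars for a node-based cluster."""
    monthly = round(hourly_per_node * nodes * HOURS_PER_MONTH)
    return monthly, monthly * 12


# Small cluster: 2 x cache.r7g.large, hourly rates from the table.
redis_small = node_cluster_cost(0.274, 2)
valkey_small = node_cluster_cost(0.219, 2)

# Medium cluster: 6 x cache.r7g.xlarge.
redis_medium = node_cluster_cost(0.437, 6)
valkey_medium = node_cluster_cost(0.350, 6)

print("small:", redis_small, valkey_small, "saves", redis_small[1] - valkey_small[1])
print("medium:", redis_medium, valkey_medium, "saves", redis_medium[1] - valkey_medium[1])
```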

Small Cluster (Development/Small Production)

Configuration: 1 shard, 1 primary + 1 replica = 2 nodes

Node type: cache.r7g.large (13.07 GiB memory, 2 vCPU)

Effective capacity: ~10GB after 25% reservation for overhead

| Engine | Hourly/Node | Monthly | Annual | Savings |
|---|---|---|---|---|
| Redis OSS | $0.274 | $400 | $4,800 | n/a |
| Valkey | $0.219 | $320 | $3,840 | $960/year |

Medium Cluster (Production Workload)

Configuration: 3 shards, 1 primary + 1 replica each = 6 nodes

Node type: cache.r7g.xlarge (26.32 GiB memory, 4 vCPU)

Effective capacity: ~60GB after 25% reservation

| Engine | Hourly/Node | Monthly | Annual | Savings |
|---|---|---|---|---|
| Redis OSS | $0.437 | $1,914 | $22,968 | n/a |
| Valkey | $0.350 | $1,533 | $18,396 | $4,572/year |

Large Cluster (High Traffic Production)

Configuration: 6 shards, 1 primary + 1 replica each = 12 nodes

Node type: cache.r7g.2xlarge (52.82 GiB memory, 8 vCPU)

Effective capacity: ~240GB after 25% reservation

| Engine | Hourly/Node | Monthly | Annual | Savings |
|---|---|---|---|---|
| Redis OSS | $0.873 | $7,647 | $91,764 | n/a |
| Valkey | $0.698 | $6,115 | $73,380 | $18,384/year |

XL Cluster (Enterprise Scale)

Configuration: 12 shards, 1 primary + 2 replicas each = 36 nodes

Node type: cache.r7g.4xlarge (105.81 GiB memory, 16 vCPU)

Effective capacity: ~950GB after 25% reservation

Throughput: 500M+ requests/second capability

| Engine | Hourly/Node | Monthly | Annual | Savings |
|---|---|---|---|---|
| Redis OSS | $1.747 | $45,918 | $551,016 | n/a |
| Valkey | $1.398 | $36,743 | $440,916 | $110,100/year |

Serverless Comparison (Variable Traffic)

For serverless deployments, the 33% discount on both storage and compute makes the differential even more pronounced at scale.

| Workload | Storage | Requests/sec | Redis OSS/year | Valkey/year | Savings |
|---|---|---|---|---|---|
| Small | 5GB | 10,000 | $5,475 | $3,650 | $1,825 |
| Medium | 25GB | 50,000 | $16,350 | $10,900 | $5,450 |
| Large | 100GB | 200,000 | $54,400 | $36,250 | $18,150 |
| XL | 500GB | 1,000,000 | $233,600 | $155,700 | $77,900 |

Note: Serverless calculations assume simple GET/SET operations (1 ECPU per request) with sub-1KB payloads. Complex operations on sorted sets or hashes consume proportionally more ECPUs.

5.2 Memory Efficiency Multiplier

The above comparisons assume identical node sizing, but Valkey 8.1’s memory efficiency improvements often allow downsizing. AWS documented a real customer case where upgrading from ElastiCache for Redis OSS to Valkey 8.1 reduced memory usage by 36%, enabling a downgrade from r7g.xlarge to r7g.large nodes. Combined with the 20% engine discount, total savings reached 50%.

For the Large Cluster example above, if memory efficiency allows downsizing from r7g.2xlarge to r7g.xlarge:

| Scenario | Configuration | Annual Cost | vs Redis OSS Baseline |
|---|---|---|---|
| Redis OSS (baseline) | 12× r7g.2xlarge | $91,764 | n/a |
| Valkey (same nodes) | 12× r7g.2xlarge | $73,380 | -20% |
| Valkey (downsized) | 12× r7g.xlarge | $36,792 | -60% |

This 60% saving is illustrative: it applies only where the memory efficiency gains are large enough to halve the node size, and it combines that downsizing with the 20% engine discount.
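Whether a given cluster can actually drop a node size depends on how full its nodes are today. The sketch below applies the 36% reduction from the AWS case and the 25% reservation used in section 5.1 to two hypothetical per-node usage levels; the usage figures and function names are assumptions for illustration.

```python
def usable_gib(node_memory_gib: float, reserved_fraction: float = 0.25) -> float:
    """Memory left for data after reserving a fraction for overhead."""
    return node_memory_gib * (1.0 - reserved_fraction)


def fits_after_reduction(used_gib: float, reduction: float, target_node_gib: float) -> bool:
    """True if the dataset, shrunk by `reduction`, fits on the smaller node."""
    projected = used_gib * (1.0 - reduction)
    return projected <= usable_gib(target_node_gib)


R7G_LARGE_GIB = 13.07  # node memory listed for cache.r7g.large in section 5.1

# Hypothetical per-node usage on cache.r7g.xlarge before the upgrade.
for used in (14.0, 16.0):
    ok = fits_after_reduction(used, 0.36, R7G_LARGE_GIB)
    print(f"{used:.0f} GiB used -> fits on r7g.large after upgrade: {ok}")
```

Under these assumptions, a node holding 14 GiB can downsize while one holding 16 GiB cannot, so the 60% outcome should be validated per cluster.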

5.3 ElastiCache Serverless

ElastiCache Serverless charges for data storage (GB-hours) and compute (ElastiCache Processing Units or ECPUs). One ECPU covers 1KB of data transferred for simple GET/SET operations. More complex commands like HMGET consume ECPUs proportional to vCPU time or data transferred, whichever is higher.

In us-east-1, ElastiCache Serverless for Valkey prices at $0.0837/GB-hour for storage and $0.00227/million ECPUs. ElastiCache Serverless for Redis OSS prices at $0.125/GB-hour for storage and $0.0034/million ECPUs. That is 33% lower on storage and 33% lower on compute for Valkey.

The minimum metered storage is 100MB for Valkey versus 1GB for Redis OSS. This enables Valkey caches starting at $6/month compared to roughly $91/month for Redis OSS.

For a reference workload of 10GB average storage and 50,000 requests/second (simple GET/SET, sub-1KB payloads), the monthly cost breaks down as follows. Storage runs 10 GB × $0.0837/GB-hour × 730 hours = $611/month for Valkey versus $912.50/month for Redis OSS. Compute runs 180 million ECPUs/hour × 730 hours × $0.00227/million = $298/month for Valkey versus $447/month for Redis OSS. Total monthly cost is roughly $909 for Valkey versus $1,359 for Redis OSS, a 33% saving.
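The same breakdown can be expressed as a small calculator. This is a sketch under the assumptions stated in the text: 730 hours per month, 1 ECPU per request, and the us-east-1 prices quoted above. The function and parameter names are illustrative.

```python
from typing import Tuple

HOURS_PER_MONTH = 730  # assumption: average hours in a month


def serverless_monthly_cost(
    storage_gb: float,
    requests_per_sec: float,
    storage_price_gb_hour: float,
    price_per_million_ecpus: float,
    ecpus_per_request: float = 1.0,
) -> Tuple[float, float, float]:
    """Return (storage, compute, total) monthly cost in dollars."""
    storage = storage_gb * storage_price_gb_hour * HOURS_PER_MONTH
    million_ecpus = requests_per_sec * ecpus_per_request * 3600 * HOURS_PER_MONTH / 1_000_000
    compute = million_ecpus * price_per_million_ecpus
    return storage, compute, storage + compute


valkey = serverless_monthly_cost(10, 50_000, 0.0837, 0.00227)
redis_oss = serverless_monthly_cost(10, 50_000, 0.125, 0.0034)
saving = 1 - valkey[2] / redis_oss[2]

print(f"Valkey:    storage ${valkey[0]:.2f}, compute ${valkey[1]:.2f}, total ${valkey[2]:.2f}")
print(f"Redis OSS: storage ${redis_oss[0]:.2f}, compute ${redis_oss[1]:.2f}, total ${redis_oss[2]:.2f}")
print(f"Saving: {saving:.0%}")
```

Raising `ecpus_per_request` above 1.0 approximates workloads with larger payloads or more complex commands.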

5.4 ElastiCache Node-Based

For node-based clusters where you choose instance types, Valkey is priced 20% lower than Redis OSS across all node types.

A cache.r7g.xlarge node (4 vCPU, 26.32 GiB memory) in us-east-1 costs $0.449/hour for Valkey versus $0.561/hour for Redis OSS. Over a month, that is $328 versus $410 per node. For a cluster with 6 nodes (3 shards, 1 replica each), annual savings reach $5,904.

Reserved nodes offer additional discounts (up to 55% for 3 year all upfront) on top of the Valkey pricing advantage. Critically, if you hold Redis OSS reserved node contracts and migrate to Valkey, your reservations continue to apply. You simply receive 20% more value from them.

5.5 MemoryDB

Amazon MemoryDB, the durable in-memory database with multi-AZ persistence, follows the same pattern. MemoryDB for Valkey is 30% lower on instance hours than MemoryDB for Redis OSS.

A db.r6g.xlarge node in us-west-2 costs $0.432/hour for Valkey versus approximately $0.617/hour for Redis OSS. For a typical HA deployment (1 shard, 1 primary, 1 replica), monthly costs run $631 for Valkey versus $901 for Redis OSS.

MemoryDB for Valkey also eliminates data written charges up to 10TB/month. Above that threshold, pricing is $0.04/GB, which is 80% lower than MemoryDB for Redis OSS.
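The data written charge is a simple tiered calculation. In this sketch, the Redis OSS rate of $0.20/GB is derived from the "80% lower" statement above rather than quoted directly, 10TB is assumed to mean 10,000 GB, and the Redis OSS charge is assumed to apply to all data written.

```python
FREE_GB_PER_MONTH_VALKEY = 10_000  # assumption: 10 TB treated as 10,000 GB
VALKEY_PRICE_PER_GB = 0.04         # rate quoted above the free threshold
REDIS_OSS_PRICE_PER_GB = 0.20      # derived: $0.04 is 80% lower than $0.20


def valkey_write_charge(gb_written: float) -> float:
    """Monthly data written charge for MemoryDB for Valkey."""
    billable = max(0.0, gb_written - FREE_GB_PER_MONTH_VALKEY)
    return billable * VALKEY_PRICE_PER_GB


def redis_oss_write_charge(gb_written: float) -> float:
    """Monthly data written charge for MemoryDB for Redis OSS (no free tier assumed)."""
    return gb_written * REDIS_OSS_PRICE_PER_GB


for gb in (8_000, 15_000):
    print(f"{gb} GB written: Valkey ${valkey_write_charge(gb):.2f}, "
          f"Redis OSS ${redis_oss_write_charge(gb):.2f}")
```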

5.6 Data Tiering Economics

For workloads with cold data that must remain accessible, ElastiCache and MemoryDB both support data tiering on r6gd node types. This moves infrequently accessed data from memory to SSD automatically.

A db.r6gd.4xlarge with data tiering can store 840GB total (approximately 105GB in memory, 735GB on SSD) at significantly lower cost than pure in-memory equivalents. For compliance workloads requiring 12 months of data retention, this can reduce costs by 52.5% compared to fully in memory configurations while maintaining low millisecond latencies for hot data.

5.7 Scaling Economics

ElastiCache Serverless for Valkey 8.0 scales dramatically faster than 7.2. In AWS benchmarks, scaling from 0 to 5 million RPS takes under 13 minutes on Valkey 8.0 versus 50 minutes on Valkey 7.2. The system doubles supported RPS every 2 minutes versus every 10 minutes.

For burst workloads, this faster scaling means lower peak latencies. The p99 latency during aggressive scaling stays under 8ms for Valkey 8.0 versus potentially spiking during the longer scaling windows of earlier versions.

5.8 Migration Economics

AWS provides zero-downtime, in place upgrades from ElastiCache for Redis OSS to ElastiCache for Valkey. The process is a few clicks in the console or a single CLI command:

    aws elasticache modify-replication-group \
      --replication-group-id my-cluster \
      --engine valkey \
      --engine-version 8.0

Your reserved node pricing carries over, and you immediately begin receiving the 20% discount on node-based clusters or 33% discount on serverless. There is no migration cost beyond the time to validate application compatibility.

5.9 Total Cost of Ownership

For an enterprise running 100GB across 10 ElastiCache clusters with typical caching workloads, the annual savings from Redis OSS to Valkey are substantial:

Serverless scenario (10 clusters, 10GB each, 50K RPS average per cluster, matching the reference workload in 5.3): roughly $109,000/year on Valkey versus $163,000/year on Redis OSS, saving $54,000 annually.

Node-based scenario (10 clusters, cache.r7g.2xlarge, 3 shards + 1 replica each): roughly $315,000/year on Valkey versus $394,000/year on Redis OSS, saving $79,000 annually.

These numbers exclude operational savings from faster scaling, lower latencies reducing retry logic, and memory efficiency improvements allowing smaller node selections.

6. Google Cloud and Azure Considerations

Google Cloud Memorystore for Valkey is generally available with a 99.99% SLA. Committed use discounts offer 20% off for one-year terms and 40% off for three-year terms, fungible across Memorystore for Valkey, Redis Cluster, Redis, and Memcached. Google was first to market with Valkey 8.0 as a managed service.

Azure offers Azure Cache for Redis as a managed service, based on licensed Redis rather than Valkey. Microsoft’s agreement with Redis Inc means Azure customers do not currently have a Valkey option through native Azure services. For Azure-primary organisations wanting Valkey, options include self-managed deployments on AKS or multi-cloud architectures leveraging AWS or GCP for caching.

Redis Cloud (Redis Inc’s managed offering) operates across AWS, GCP, and Azure with consistent pricing. Commercial quotes are required for production workloads, making direct comparison difficult, but the pricing does not include the aggressive discounting that cloud providers apply to Valkey.

7. Third Party Options

Upstash offers a true pay-per-request serverless Redis-compatible service at $0.20 per 100K requests plus $0.25/GB storage. For low-traffic applications (under 1 million requests/month with 1GB storage), Upstash costs roughly $2.25/month versus $91+/month for ElastiCache Serverless Redis OSS. Upstash also provides a REST API for environments where TCP is restricted, such as Cloudflare Workers.
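A short calculation shows where the pay-per-request model stops being cheaper than a fixed monthly floor. The sketch uses only the Upstash rates quoted above and treats the storage rate as per GB per month; the $91 floor is the ElastiCache Serverless Redis OSS minimum from the text and excludes any ECPU charges, so the real crossover sits somewhat higher. Function names are illustrative.

```python
PRICE_PER_100K_REQUESTS = 0.20  # quoted above
PRICE_PER_GB_STORAGE = 0.25     # quoted above, assumed to be per month


def upstash_monthly_cost(requests: int, storage_gb: float) -> float:
    """Monthly cost in dollars under the pay-per-request pricing quoted above."""
    return requests / 100_000 * PRICE_PER_100K_REQUESTS + storage_gb * PRICE_PER_GB_STORAGE


def breakeven_requests(monthly_floor: float, storage_gb: float) -> float:
    """Monthly request volume at which pay-per-request cost equals a fixed floor."""
    return (monthly_floor - storage_gb * PRICE_PER_GB_STORAGE) / PRICE_PER_100K_REQUESTS * 100_000


print(f"1M requests, 1GB: ${upstash_monthly_cost(1_000_000, 1.0):.2f}/month")
print(f"Break-even vs $91 floor: {breakeven_requests(91.0, 1.0):,.0f} requests/month")
```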

Dragonfly, KeyDB, and other Redis-compatible alternatives exist but lack the cloud provider backing and scale validation that Valkey has demonstrated.

8. Decision Framework

8.1 Choose Valkey When

Licensing clarity matters. BSD 3-clause eliminates legal review friction.

Raw throughput is paramount. 37% higher write throughput, 60%+ lower p99 read latency.

Memory efficiency counts. 20-30 bytes per key adds up at scale.

Cloud provider alignment matters. AWS and GCP are betting their managed services on Valkey.

Cost optimisation is a priority. 20-33% lower pricing on major cloud platforms with zero-downtime migration paths.

Traditional use cases dominate. Caching, session stores, leaderboards, queues.

8.2 Choose Redis When

Vector search must be native. Redis 8’s vector sets are core, not modular.

Time series is critical. RedisTimeSeries in core has no Valkey equivalent today.

Full-text search is needed now. Redis Query Engine ships; Valkey’s is roadmap.

Existing Redis Enterprise investment exists. Redis Software/Redis Cloud with enterprise support, LDAP, RBAC already deployed.

Following the original creator’s technical direction has value. Antirez is back at Redis.

8.3 Choose Managed Serverless When

Traffic is unpredictable. ElastiCache Serverless scales automatically.

Ops overhead must be minimal. No node sizing, patching, or capacity planning.

Low-traffic applications dominate. Valkey’s $6/month minimum versus $91+ for Redis OSS on ElastiCache Serverless.

Multi-region requirements exist. Managed services handle replication complexity.

9. Production Tuning Notes

9.1 Valkey I/O Threading

Enable with io-threads N in configuration. Start with core count minus 2 for the I/O thread count. The system dynamically adjusts active threads based on load, so slight overprovisioning is safe.
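The starting-point rule can be turned into a small helper that prints a candidate configuration line. This is a sketch of the "core count minus 2" heuristic only; the function name is illustrative, the floor of 1 is an assumption for small hosts, and the result should be validated under real load.

```python
import os


def suggested_io_threads(cpu_cores: int) -> int:
    """Starting point from the text: core count minus 2, never below 1."""
    return max(1, cpu_cores - 2)


cores = os.cpu_count() or 1  # cpu_count() can return None on some platforms
print(f"io-threads {suggested_io_threads(cores)}")
```

On a container with a CPU limit, `os.cpu_count()` reports host cores, so pass the allocated core count explicitly instead.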

For TLS workloads, Valkey 8.1 offloads TLS negotiation to I/O threads, improving new connection rates by roughly 300%.

9.2 Memory Defragmentation

Valkey 8.1 reduced active defrag cycle time to 500 microseconds with anti-starvation protection. This eliminates the historical issue of 1ms+ latency spikes during defragmentation.

9.3 Cluster Scaling

Valkey 8.0 introduced automatic failover for empty shards and replicated migration states. During slot movement, cluster consistency is maintained even through node failures. This was contributed by Google and addresses real production pain from earlier Redis cluster implementations.

10. The Verdict

The Redis fork has produced genuine competition for the first time in the in-memory data store space. Valkey is not merely a “keep the lights on” maintenance fork. It is evolving faster than Redis in core performance characteristics, backed by engineers who wrote much of the original Redis codebase, and supported by the largest cloud providers.

For enterprise architects, the calculus is increasingly straightforward. Unless you have specific dependencies on Redis 8’s bundled modules (particularly time series or full-text search), Valkey offers superior performance, clearer licensing, and lower costs on managed platforms.

The commercial signals are unambiguous. AWS prices Valkey 20-33% below Redis OSS on ElastiCache and 30% below on MemoryDB. Reserved node contracts transfer seamlessly. Migration is zero-downtime. The incentive structure points one direction.

The Redis licence changes in 2024 were intended to monetise cloud provider usage. Instead, they unified the cloud providers behind an alternative that is now outperforming the original. The return to AGPLv3 in 2025 acknowledges the strategic error, but the community momentum has shifted.

Redis is open source again. But the community that made it great is building Valkey.