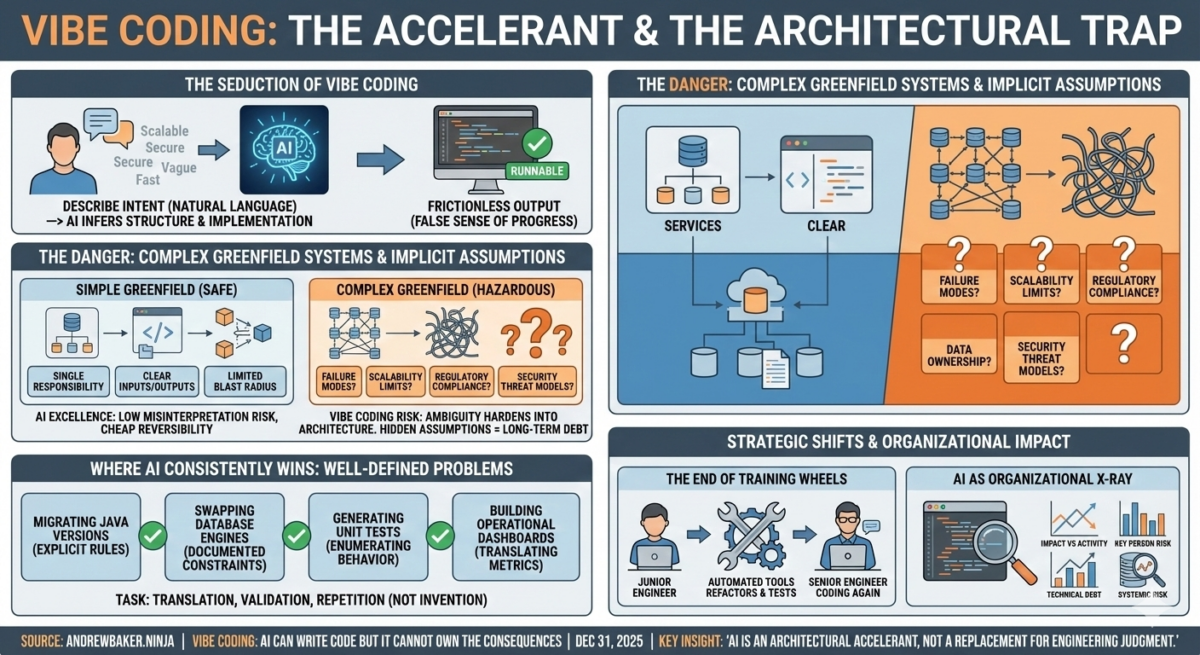

AI is a powerful accelerator when problems are well defined and bounded. In complex greenfield systems, however, vague intent hardens into architecture and creates long-term risk that no amount of automation can undo.

1. What Vibe Coding Really Is

Vibe coding is the practice of describing intent in natural language and allowing AI to infer structure, logic, and implementation directly from that description. It is appealing because it feels frictionless. You skip formal specifications, you skip design reviews, and you skip the uncomfortable work of forcing vague ideas into precise constraints. You describe what you want and something runnable appears.

The danger is that human language is not executable. It is contextual, approximate, and filled with assumptions that are never stated. When engineers treat language as if it were a programming language they are pretending ambiguity does not exist. AI does not remove that ambiguity. It simply makes choices on your behalf and hides those choices behind confident output.

This creates a false sense of progress. Code exists, tests may even pass, and demos look convincing. But the hardest decisions have not been made. They have merely been deferred and embedded invisibly into the system.

2. Language Is Not Logic And Never Was

Dave Varley has consistently highlighted that language evolved for human conversation, not for deterministic execution. Humans resolve ambiguity through shared context, interruption, and correction. Machines do not have those feedback loops. When you say "make this scalable" or "make this secure" you are not issuing instructions. You are expressing intent without constraints.

Scalable might mean high throughput, burst tolerance, geographic distribution, or cost efficiency. Secure might mean basic authentication or resilience against a motivated attacker. AI must choose one interpretation. It will do so based on statistical patterns in its training data, not on your business reality. That choice is invisible until the system is under stress.

At that point the system will behave correctly according to the wrong assumptions. This is why translating vague language into production systems is inherently hazardous. The failure mode is not obvious bugs. It is systemic misalignment between what the business needs and what the system was implicitly built to optimise.
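One way to take the guesswork out of a word like "scalable" is to write the constraint down in a form that can be checked. The following is a minimal sketch, not a prescribed method: the `ScalabilityTarget` type, its field names, and the idea of comparing it against load test measurements are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalabilityTarget:
    """Hypothetical explicit constraints; names and units are illustrative."""
    sustained_rps: int            # steady-state requests per second
    burst_rps: int                # short-lived peak the system must absorb
    p99_latency_ms: float         # tail latency budget at sustained load
    max_monthly_cost_usd: float   # cost ceiling for the target load


def unmet_constraints(target, measured):
    """Return the names of constraints that a measured result violates.

    `measured` is assumed to be a dict produced by a load test, keyed by
    sustained_rps, burst_rps, p99_latency_ms and monthly_cost_usd.
    """
    failures = []
    if measured["sustained_rps"] < target.sustained_rps:
        failures.append("sustained_rps")
    if measured["burst_rps"] < target.burst_rps:
        failures.append("burst_rps")
    if measured["p99_latency_ms"] > target.p99_latency_ms:
        failures.append("p99_latency_ms")
    if measured["monthly_cost_usd"] > target.max_monthly_cost_usd:
        failures.append("max_monthly_cost_usd")
    return failures
```

Once the target is written this way, "scalable" stops being an interpretation the AI makes for you and becomes a decision someone can review and challenge.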

3. Where Greenfield AI Coding Breaks Down And Where It Is Perfectly Fine

It is important to be precise. The risk is not greenfield work itself. The risk is complex greenfield systems, where ambiguity, coupling, and long-lived architectural decisions matter. Simple greenfield services that are isolated, well bounded, and easily unitisable are often excellent candidates for AI-assisted generation.

Problems arise when teams treat all greenfield work as equal.

Complex greenfield systems are those where early decisions define the operational, regulatory, and scaling envelope for years. These systems require intentional design because small assumptions compound over time and become expensive or impossible to reverse. In these environments relying on vibe coding is dangerous because there is no existing behaviour to validate against and no production history to expose incorrect assumptions.

Complex greenfield systems require explicit decisions on concerns that natural language routinely hides, including:

- Failure modes and recovery strategies across services

- Scalability limits and saturation behaviour under load

- Regulatory, audit, and compliance obligations

- Data ownership, retention, and deletion semantics

- Observability requirements and operational accountability

- Security threat models and trust boundaries

When these concerns are not explicitly designed, they are implicitly inferred by the AI. Those inferences become embedded in code paths, schemas, and runtime behaviour. Because they were never articulated, they were never reviewed. This creates architectural debt at inception. The system may pass functional tests yet fail under real-world pressure where those hidden assumptions no longer hold.

By contrast, simple greenfield services behave very differently. Small services with a single responsibility, minimal state, clear inputs and outputs, and a limited blast radius are often ideal for AI-assisted generation. If a service can be fully described by its interface, exhaustively unit tested, and replaced without systemic impact, then misinterpretation risk is low and correction cost is small.

AI works well when reversibility is cheap. It becomes hazardous when ambiguity hardens into architecture.

4. Where AI Clearly Wins Because the Problem Is Defined

AI excels when the source state exists and the target state is known. In these cases the task is not invention but translation, validation, and repetition. This is where AI consistently outperforms humans.

4.1 Migrating Java Versions

Java version migrations are governed by explicit rules. APIs are deprecated, removed, or replaced in documented ways. Behavioural changes are known and testable. AI can scan entire codebases across hundreds of repositories, identify incompatible constructs, refactor them consistently, and generate validation tests.

Humans are slow and inconsistent at this work because it is repetitive and detail heavy. AI does not get bored, and it applies the same rule the same way in every repository, although its output still has to be validated by the test suite. What used to take months of coordinated effort is increasingly a one-click, multi-repository transformation.
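To make the "explicit rules" point concrete, here is a minimal sketch of a single migration rule applied mechanically. It covers one documented change: the boxed-primitive constructors such as `new Integer(5)` were deprecated in Java 9 in favour of the `valueOf` factories. The function names are hypothetical, and the regex approach is an assumption chosen for brevity. It does not understand comments or string literals, which is why real tooling works on a parsed syntax tree.

```python
import re

# One illustrative rule: `new Integer(...)`, `new Long(...)` and the other
# boxed-primitive constructors were deprecated in Java 9; the documented
# replacement is the matching static `valueOf(...)` factory.
WRAPPER_CTOR = re.compile(
    r"\bnew\s+(Integer|Long|Short|Byte|Double|Float|Boolean|Character)\s*\("
)


def find_deprecated_wrappers(source):
    """Return (line_number, matched_text) for every flagged constructor call."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        for match in WRAPPER_CTOR.finditer(line):
            hits.append((number, match.group(0)))
    return hits


def rewrite_wrappers(source):
    """Rewrite `new Integer(` as `Integer.valueOf(`, and likewise for the rest."""
    return WRAPPER_CTOR.sub(lambda m: f"{m.group(1)}.valueOf(", source)
```

The value is not in any one rule but in applying hundreds of them identically across every repository, then letting the existing tests confirm that behaviour did not change.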

4.2 Swapping Database Engines

Database engine migrations are another area where constraints are well understood. SQL dialect differences, transactional semantics, and indexing behaviour are documented. AI can rewrite queries, translate stored procedures, flag unsupported features, and generate migration tests that prove equivalence.

Humans historically learned databases by doing this work manually. That learning value still exists, but the labour component no longer makes economic sense. AI performs the translation faster, more consistently, and with fewer missed edge cases.
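A small sketch of what dialect translation looks like in practice, assuming a MySQL to PostgreSQL move (the article does not name specific engines, so that pairing is an assumption). It handles two well documented differences: MySQL's `LIMIT offset, count` form and backtick-quoted identifiers. It also flags `REPLACE INTO`, which PostgreSQL does not support. The function names are hypothetical, and a real migration would use a SQL parser rather than regular expressions.

```python
import re

# MySQL accepts `LIMIT offset, count`; PostgreSQL needs `LIMIT count OFFSET offset`.
LIMIT_COMMA = re.compile(r"\bLIMIT\s+(\d+)\s*,\s*(\d+)", re.IGNORECASE)
# MySQL quotes identifiers with backticks; PostgreSQL uses double quotes.
BACKTICK_IDENT = re.compile(r"`([^`]+)`")

# Constructs with no one-to-one rewrite are flagged for a human decision.
UNSUPPORTED = {
    "REPLACE INTO": "no direct equivalent; consider INSERT ... ON CONFLICT",
}


def mysql_to_postgres(sql):
    """Apply the two mechanical rewrites above to a single statement."""
    sql = BACKTICK_IDENT.sub(r'"\1"', sql)
    return LIMIT_COMMA.sub(r"LIMIT \2 OFFSET \1", sql)


def flag_unsupported(sql):
    """Return the names of flagged constructs that appear in the statement."""
    upper = sql.upper()
    return [name for name in UNSUPPORTED if name in upper]
```

The split between "rewrite automatically" and "flag for review" is the important design choice: translation is mechanical, but a construct with no equivalent is a decision, and decisions should surface rather than be guessed.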

4.3 Generating Unit Tests

Unit testing is fundamentally about enumerating behaviour. Given existing code, AI can infer expected inputs, outputs, and edge cases. It can generate tests that cover boundary conditions, null handling, and error paths that humans often skip due to time pressure.

This raises baseline quality dramatically and frees engineers to focus on defining correctness rather than writing boilerplate.
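As an illustration of "enumerating behaviour", the sketch below pairs a small function with the kind of tests a generator would be expected to produce: both boundaries, the values just outside them, null input, and malformed input. The function `parse_percentage` is hypothetical and exists only to give the tests something to exercise.

```python
import unittest


def parse_percentage(text):
    """Hypothetical function under test: parse '0'..'100' into an int."""
    if text is None:
        raise ValueError("value is required")
    value = int(text.strip())  # int() raises ValueError on non-numeric input
    if not 0 <= value <= 100:
        raise ValueError(f"out of range: {value}")
    return value


class ParsePercentageTest(unittest.TestCase):
    def test_boundaries_are_accepted(self):
        self.assertEqual(parse_percentage("0"), 0)
        self.assertEqual(parse_percentage("100"), 100)

    def test_whitespace_is_tolerated(self):
        self.assertEqual(parse_percentage(" 42 "), 42)

    def test_values_just_outside_the_range_are_rejected(self):
        for text in ("-1", "101"):
            with self.subTest(text=text):
                with self.assertRaises(ValueError):
                    parse_percentage(text)

    def test_null_and_malformed_input_are_rejected(self):
        for text in (None, "", "abc", "4.5"):
            with self.subTest(text=text):
                with self.assertRaises(ValueError):
                    parse_percentage(text)
```

Saved as a module, this runs with `python -m unittest`. Note what the tests cannot decide: whether `"4.5"` should be rejected or rounded is a definition of correctness, and that remains the engineer's call.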

4.4 Building Operational Dashboards

Operational dashboards translate metrics into insight. The important signals are well known: latency, error rates, saturation, and throughput. AI can identify which metrics matter, correlate signals across services, and generate dashboards that focus on tail behaviour rather than averages.

The result is dashboards that are useful during incidents rather than decorative artifacts.
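Why tail behaviour matters more than averages is easy to show with numbers. The sketch below uses a nearest-rank percentile, which is one of several common definitions and is chosen here only because it is simple to verify by hand. The function names are hypothetical.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def latency_summary(latencies_ms):
    """Summarise latencies with the mean alongside median and tail values."""
    return {
        "mean": mean(latencies_ms),
        "p50": percentile(latencies_ms, 50),
        "p99": percentile(latencies_ms, 99),
    }
```

For 98 requests at 10 ms and 2 requests at 2000 ms, the mean is 49.8 ms and the median is 10 ms, while the p99 is 2000 ms. A dashboard built on the mean would show a healthy service, and the p99 shows the customers who are timing out.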

5. The End of Engineering Training Wheels

Many tasks that once served as junior engineering work are now automated. Refactors, migrations, test generation, and dashboard creation were how engineers built intuition. That work still needs to be understood, but it no longer needs to be done manually.

This changes team dynamics. Senior engineers are coding again because AI removes the time cost of boilerplate. When the yield of time spent writing code improves, experienced engineers re-engage with implementation and apply judgment where it actually matters.

The industry now faces a structural challenge. The old apprenticeship path is gone, but the need for deep understanding remains. Organisations that fail to adapt their talent models will feel this gap acutely.

6. AI As an Organisational X-Ray

AI is also transforming how organisations understand themselves. By scanning all repositories across a company, AI can rank contributions by real impact rather than activity volume. It can identify where knowledge is concentrated in individuals, exposing key person risk. It can quantify technical debt and price remediation effort so leadership can see risk in economic terms.

It can also surface scaling choke points and cyber weaknesses that manual reviews often miss. This removes plausible deniability. Technical debt and systemic risk become visible and measurable whether the organisation is comfortable with that or not.
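Key person risk, mentioned above, is the easiest of these signals to sketch. The example below assumes the change history has already been extracted into `(author, path)` pairs, for instance from version control logs. The function name and the 80 percent threshold are hypothetical. Change counts are only a crude proxy for knowledge, so treat this as a starting point for a conversation rather than a measure of impact.

```python
from collections import Counter, defaultdict


def ownership_concentration(changes, threshold=0.8):
    """Flag paths where one author made at least `threshold` of all changes.

    `changes` is an iterable of (author, path) pairs.
    Returns {path: (top_author, share_of_changes)} for flagged paths only.
    """
    by_path = defaultdict(Counter)
    for author, path in changes:
        by_path[path][author] += 1

    flagged = {}
    for path, authors in by_path.items():
        top_author, top_count = authors.most_common(1)[0]
        share = top_count / sum(authors.values())
        if share >= threshold:
            flagged[path] = (top_author, share)
    return flagged
```

Run across every repository, even a heuristic this simple shows which parts of the system only one person has ever touched.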

7. The Cardinal Sin of AI Operations And Why It Breaks Production

AI driven operations can be powerful, but only under strict architectural conditions. The most dangerous mistake teams make is allowing AI tools to interact directly with live transactional systems that use pessimistic locking and have no read replicas.

Pessimistic locks exist to protect transactional integrity. When a transaction holds a lock, it blocks other transactions that need conflicting access to the same data until the lock is released. An AI system that continuously probes production tables for insight can unintentionally extend lock duration or introduce poorly sequenced queries. This leads to deadlocks, where transactions wait on each other's locks until the database aborts one of them or a timeout fires, and to increased contention that slows down write throughput for real customer traffic.

The impact is severe. Production write latency increases, customer-facing operations slow down, and in the worst cases the system enters cascading failure as retries amplify contention. This is not theoretical. It is a predictable outcome of mixing analytical exploration with locked OLTP workloads.

AI operational tooling should only ever interact with systems that have:

- Real time read replicas separated from write traffic

- No impact on transactional locking paths

- The ability to support heterogeneous indexing

Heterogeneous indexing allows different replicas to optimise for different query patterns without affecting write performance. This is where AI driven analytics becomes safe and effective. Without these properties, AI ops is not just ineffective, it is actively dangerous.
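The first condition in the list above can be enforced in code rather than left to convention. This is a minimal sketch under stated assumptions: `DatabaseTarget`, `UnsafeTargetError`, and `select_analytics_target` are hypothetical names, and how the targets are discovered is left out. The point is the failure behaviour, which is to refuse rather than fall back to the primary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatabaseTarget:
    """Hypothetical description of a database endpoint."""
    name: str
    role: str  # "primary" (takes writes) or "replica" (read only)


class UnsafeTargetError(RuntimeError):
    """Raised when analytical queries would have to run on the primary."""


def select_analytics_target(targets):
    """Return the first read replica, and never fall back to the primary."""
    for target in targets:
        if target.role == "replica":
            return target
    raise UnsafeTargetError(
        "no read replica available; refusing to query the primary"
    )
```

Failing closed is deliberate. An AI tool that cannot find a replica should stop and report, because the alternative is exploratory queries competing with customer transactions for locks.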

8. Conclusion: Clarity Over Vibes

AI is an extraordinary force multiplier, but it does not absolve engineers of responsibility. Vibe coding feels productive because it hides complexity. In complex greenfield systems that hidden complexity becomes long-term risk.

Where AI shines is in transforming known systems, automating mechanical work, and exposing organisational reality. It enables senior engineers to code again and forces businesses to confront technical debt honestly.

AI is not a replacement for engineering judgment. It is an architectural accelerant. When intent is clear, constraints are explicit, and blast radius is contained, AI dramatically increases leverage. When intent is vague and architecture is implicit, AI fossilises early mistakes at machine speed.

The organisations that win will not be those that let AI think for them, but those that use it to execute clearly articulated decisions faster and more honestly than their competitors ever could.